As AI continues to transform the data center landscape, Vertiv has identified the 2025 data center trends we consider most impactful in the near future. From innovations in power and cooling to AI integration, energy solutions, and evolving regulatory measures, our experts explore the key technologies driving efficiency and adaptability in a rapidly evolving industry.

How power and cooling can meet AI densification demands

Driven by the rapid surge in data center and high-performance computing (HPC) workloads, power and cooling capacity at the core data center and the edge will have to keep pace with increasing data and workload demands.


The increasing demand for compute-intensive workloads is pushing existing power and cooling infrastructure to the limits of its capacity. Across the industry, data center demand and electricity consumption are projected to double. In 2025, this shift will be further driven by an accelerating transition from CPU- to GPU-based systems, which offer greater parallel computing power but come with the higher thermal design points of modern chips. These ultra-fast chips require innovative solutions and approaches to manage intensified heat and power requirements.

Shifting from the CPU to the GPU

The transition from CPU to GPU is a fundamental change in how data centers operate. GPUs, which handle parallel processing tasks more efficiently, are becoming the preferred choice for advanced computing workloads, particularly in AI and machine learning (ML) applications. This preference is placing unprecedented stress on existing power and cooling systems, and traditional power and air-cooling methods are increasingly challenged to manage the heat generated by these high-performance chips. As a result, data center operators are turning to advanced solutions, such as modular cold-plate and immersion cooling, to deploy faster and keep pace with surging demand.

[Watch DCD Mission Critical Power Webinar: 2025 Trends and Outlooks on Power and Cooling]

Impact on enterprise data centers and the edge

The adoption of AI is expanding beyond early cloud and colocation providers, reaching into enterprise and edge data centers. AI workloads are characterized by their high variability in power consumption and demand for low-latency processing. Power loads in main data centers can fluctuate from a 10% idle mode to a 150% sudden overload spike.

These drastic fluctuations are driving the need for more advanced and flexible cooling strategies: As AI workloads continue to push rack densities into the three- and four-digit kilowatt ranges, supporting infrastructure must evolve with scalable liquid and air-cooling solutions to manage the increased heat and power demands.
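To put the 10% idle and 150% spike figures in concrete terms, here is a minimal Python sketch that computes the load envelope for a hypothetical rack. The 100 kW nominal load, the 120 kW rating, and the function names are illustrative assumptions, not Vertiv specifications.

```python
def load_envelope(nominal_kw, idle_frac=0.10, spike_frac=1.50):
    """Return (idle_kw, spike_kw, swing_kw) for a workload with the given nominal load.

    Defaults reflect the 10% idle and 150% spike figures quoted above.
    """
    idle_kw = nominal_kw * idle_frac
    spike_kw = nominal_kw * spike_frac
    return idle_kw, spike_kw, spike_kw - idle_kw


def covers_spike(rated_kw, nominal_kw, spike_frac=1.50):
    """True if a power/cooling path rated at rated_kw can absorb the spike without overload."""
    return rated_kw >= nominal_kw * spike_frac


idle, spike, swing = load_envelope(100.0)  # hypothetical 100 kW nominal rack
print(f"idle={idle:.0f} kW, spike={spike:.0f} kW, swing={swing:.0f} kW")
print(covers_spike(rated_kw=120.0, nominal_kw=100.0))  # False: 120 kW < 150 kW spike
```

Under these assumptions the load swings by 140 kW between idle and spike, a 15x ratio, so a path sized only with modest headroom over nominal load would still be overrun.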

Moreover, integrated infrastructure will be essential for maximizing efficiency. Factory-integrated liquid cooling for AI systems is becoming more common, streamlining manufacturing and assembly. This integration speeds deployment, reduces gray space, and increases system energy efficiency. By incorporating cooling solutions directly into server design, manufacturers can create more compact and efficient systems.

As these data centers mature in their inferencing phases, the next few years will see a drive to extend these same power and cooling solutions to “the edge of AI.” We believe inferencing at the edge will coexist, at some level, with inferencing in core data centers. It may be embedded somewhere within the network, but it will likely be distributed further out. In terms of power and cooling, it’s reasonable to expect demand to rise at the edge, but likely not on the same trajectory as in the data center or the cloud itself.

This is still nascent as we relate power back to how AI will impact data centers. A significant amount of inferencing is happening within the main data centers, but we also know the requirement to enable inferencing at the edge will be there.

Cooling solutions: Cold-plate, immersion, and hybrid

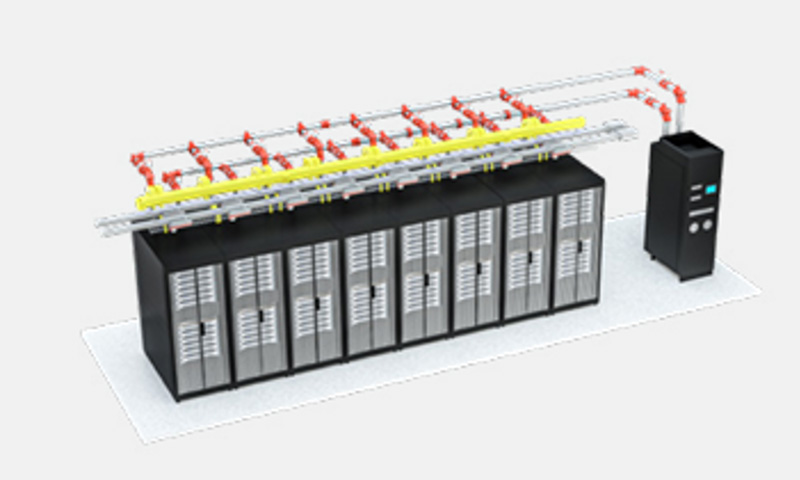

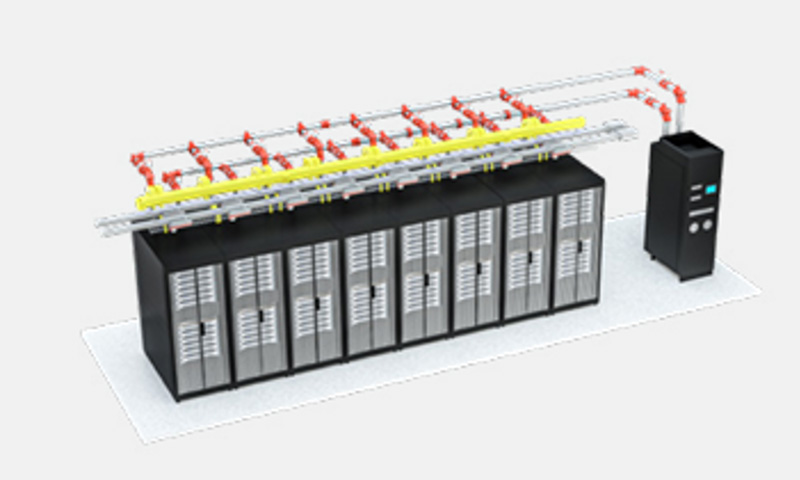

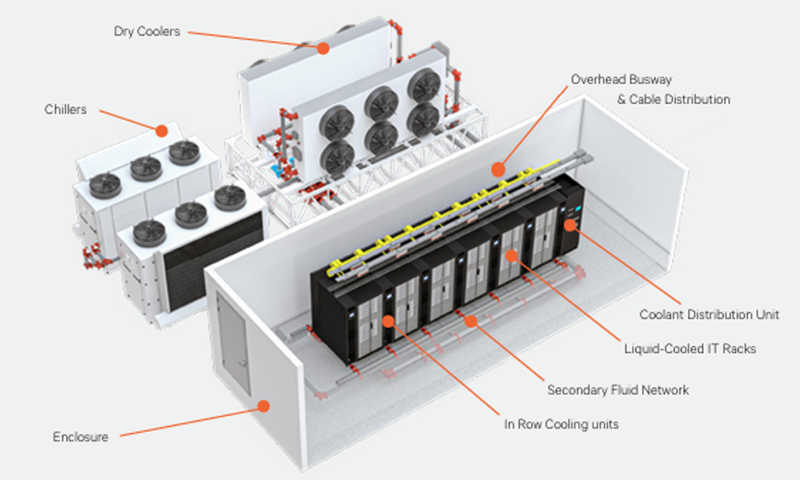

Vertiv™ MegaMod™ CoolChip with Vertiv™ Perimeter Cooling

Vertiv™ MegaMod™ CoolChip with Vertiv™ Liebert® DCD rack-mounted rear door heat exchanger

Vertiv™ MegaMod™ CoolChip with Vertiv™ In-row Cooling

Figure 1. Vertiv™ MegaMod™ CoolChip with liquid cooling: This modular solution enables up to 50% faster deployment.

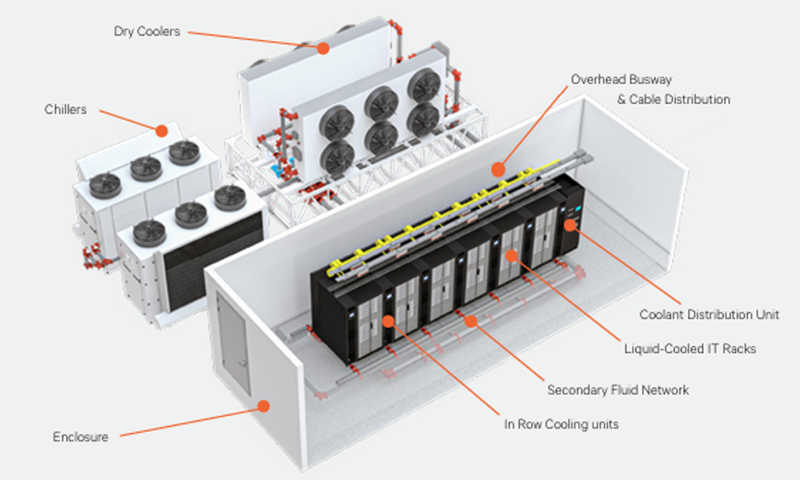

Figure 2. Liebert® XDU coolant distribution units: Designed to meet the thermal demands of high-density AI applications while enhancing energy efficiency and maintaining operational reliability.

Cold-plate and immersion cooling are emerging as critical technologies to address the thermal challenges posed by AI workloads. Cold-plate cooling efficiently transfers heat by placing cooling plates directly on the heat-generating components, while immersion cooling submerges hardware in a thermally conductive, electrically insulating liquid. Both methods are highly effective at dissipating heat at the rack level, making them ideal for high-density computing environments.
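The heat a liquid loop can carry follows the basic relation Q = ṁ · c_p · ΔT. The sketch below uses it to estimate the coolant flow a rack needs, assuming plain water (c_p ≈ 4.186 kJ/(kg·K), density taken as 1 kg/L), a hypothetical 100 kW rack, and a 10 K coolant temperature rise. Real loops often use glycol mixtures with different properties, so treat the numbers as illustrative.

```python
WATER_CP_KJ_PER_KG_K = 4.186   # specific heat of water, approx., near room temperature
WATER_DENSITY_KG_PER_L = 1.0   # simplifying assumption


def required_flow_lpm(heat_kw, delta_t_k,
                      cp=WATER_CP_KJ_PER_KG_K, density=WATER_DENSITY_KG_PER_L):
    """Coolant flow (L/min) needed to carry heat_kw with a temperature rise of delta_t_k.

    From Q = m_dot * c_p * dT  ->  m_dot = Q / (c_p * dT), in kg/s when Q is in kW
    and c_p is in kJ/(kg*K).
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_kw / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60.0  # kg/s -> L/s -> L/min


print(f"{required_flow_lpm(100, 10):.1f} L/min")  # hypothetical 100 kW rack, 10 K rise
```

Under these assumptions the rack needs roughly 143 L/min; halving the allowed temperature rise doubles the required flow.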

Hybrid cooling systems are evolving to meet the diverse cooling needs of modern data centers. These systems combine liquid-to-liquid, liquid-to-air, and liquid-to-refrigerant configurations, offering flexibility in deployment across different environments. Rackmount, perimeter, and row-based cabinet models are available to cater to both brownfield and greenfield applications. This adaptability is crucial for data centers looking to upgrade their infrastructure without significant downtime or disruptions.

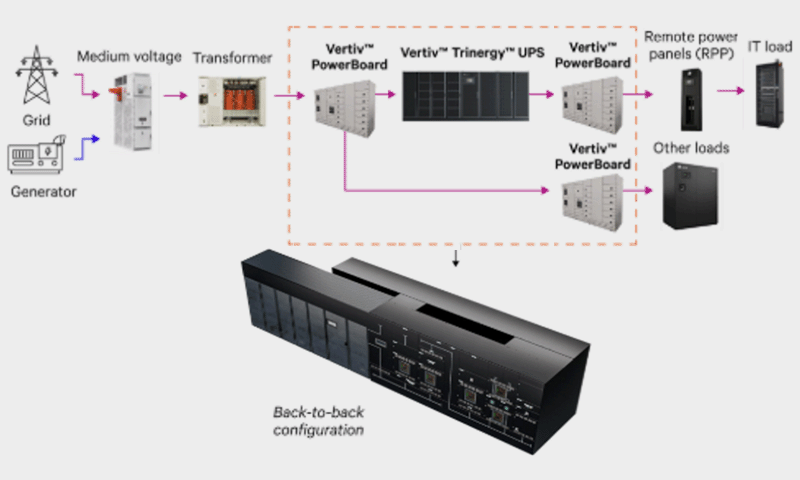

Dedicated high-density UPS systems

As data centers adopt liquid cooling to manage AI workloads, they also need dedicated high-density UPS systems. These systems are designed to provide continuous operation, ensuring that critical workloads remain unaffected by power fluctuations. This integration is essential for maintaining the reliability and efficiency of data centers, particularly those handling AI and other compute-intensive tasks.

Key Takeaway

If 2024 demonstrated anything, it is that AI is here to stay. The data center industry must continue to innovate, because its future lies in the ability to adapt to the increasing demands of compute-intensive workloads. From innovation in the infrastructure needed to support AI to regulatory initiatives related to its use, we anticipate further acceleration in and around the AI market in the coming year.

The shift from CPU to GPU, the adoption of new cooling solutions, and the integration of advanced UPS systems are just a few of the ways data centers are rising to meet these challenges. While we are still in the early days of AI’s transformation of our industry, its rapid expansion has already disrupted our historically linear development. The lessons of past decades suggest that continued research, learning, innovation, and collaboration with partners are what will enable the surge and widespread deployment of AI, and allow us to keep pace.

Addressing energy availability challenges

As demand for grid power increases across all sectors, data centers are prioritizing energy-efficient solutions and distributed energy resources (DERs) to manage rising demands driven by high-performance computing (HPC) services, AI advancements, and regulatory pressures.


Data centers underpin crucial digital services, including emerging AI applications. However, their energy consumption is becoming a significant concern. In 2023, data centers consumed about 4.4% of total electricity in the US, and that consumption is expected to triple by 2028. This increase in power demand is putting immense pressure on already overextended grids, leading to potential regulatory scrutiny and higher operational costs.

AI applications are particularly power-hungry, requiring substantial computational resources. According to a report from S&P Global Market Intelligence, as AI evolves and integrates into various industries, energy availability relative to requirements is becoming data centers’ main limiting factor, with demand expected to surge. As predicted in 2023, regulators worldwide will continue to focus on reining in this increased demand by implementing energy allocation mandates and limits.
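The projection that consumption will triple by 2028 implies a steep annual growth rate. A quick sketch, assuming "triple" means 3x growth over the five years from 2023 to 2028, compounded evenly (the function name is illustrative):

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate that turns 1 unit into growth_multiple units over `years`."""
    if years <= 0:
        raise ValueError("years must be positive")
    return growth_multiple ** (1.0 / years) - 1.0


rate = implied_cagr(3.0, 2028 - 2023)  # assumption: 3x growth over 2023-2028
print(f"{rate:.1%} per year")
```

Under that assumption, consumption would have to grow by roughly 24.6% per year.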

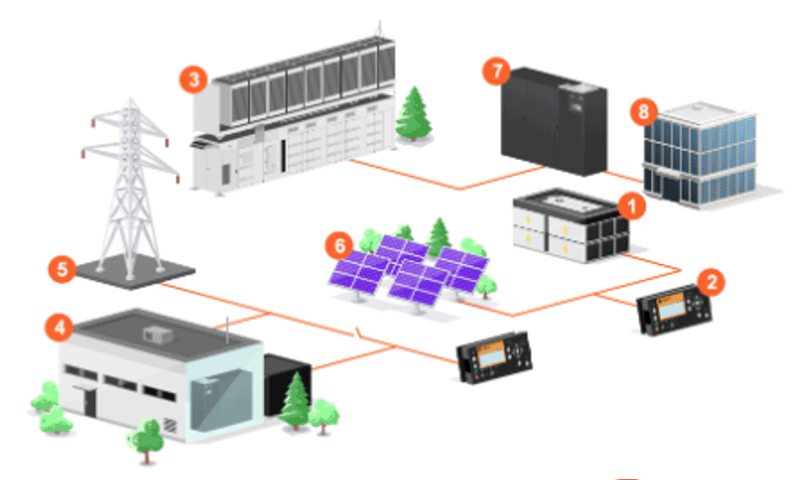

Diagram components: Vertiv DynaFlex BESS, Vertiv Controller, hydrogen fuel cell, data center, utility feed, solar PV, Vertiv UPS, and R&D lab.

Figure 1. The Vertiv™ Dynamic Power energy ecosystem, an example of an always-on microgrid for energy independence behind the meter (BTM).

This year, data center operators will turn to alternative distributed energy resources (DERs) and other energy storage technologies to respond to demand and drive their business goals in the medium and long term. Spending on enterprise and edge data centers is expected to rise, particularly in sectors expanding their AI services and applications, such as finance and manufacturing. The goals are to ensure uninterrupted operations regardless of company size or limited grid allocations, and to meet environmental objectives.

Regulatory and environmental pressures

The increasing energy demands of data centers are attracting attention from governments worldwide. Regulatory bodies are considering imposing restrictions on data center builds and energy usage to manage the strain on power grids. Additionally, the environmental impact of higher energy consumption is a growing concern, with data centers contributing to increased carbon emissions. These factors are driving data center operators to seek more energy-efficient solutions.

The drive to energy efficiency

Energy efficiency has always been a priority for data centers, but current pressures make it even more critical, both at the main data center hubs and in the locations where edge infrastructure needs power. A significant amount of inferencing happens within the main data centers, but operators and vendors alike recognize the emerging requirement to enable inferencing at the edge. As power density rises, the focus on energy efficiency at the edge will likely be greater, because edge sites simply don’t have the capacity to host a triple-digit-kilowatt rack. With generative AI (GenAI) and broad AI gaining mainstream popularity, and high-performance computing expected to expand further, the risks of underinvesting in AI development, infrastructure, and power distribution efficiency are greater than the risks of overinvesting. When done right, projected return on investment (ROI) for AI use cases in companies grows significantly.

Figure 2. The modular design of Vertiv™ PowerNexus enables faster deployment, easier scalability, and a smaller footprint in the data center. Moreover, its close-coupled integration reduces power loss by removing the distance between the switchgear and the bypass cabinets, lowering operational costs in the process.

According to analyst firm IDC, operators are investing in advanced cooling technologies, energy-efficient hardware, and innovative data center designs to minimize energy consumption. These efforts are essential for reducing operational costs, meeting regulatory requirements, and mitigating environmental impact. Moreover, as AI governance is expected to mature this year, more enterprises and data centers can adopt and strengthen their respective total cost of ownership (TCO) models and decarbonization frameworks.

In response to these challenges, the data center industry is shifting toward alternative energy sources and microgrid deployments. In 2024, there was a notable trend toward exploring these options, and this trend has accelerated in 2025. Data centers are increasingly adopting fuel cells and alternative battery chemistries as part of their microgrid energy solutions. Battery energy storage systems (BESS) offer always-on, bidirectional power distribution and supply regardless of peak times, and their reduced transfer times during outages make them a modern, more environmentally responsible alternative to diesel generators.
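Peak shaving is one common way a BESS supplies power regardless of peak times: it discharges when site load exceeds a grid import cap and recharges when load falls below it. The sketch below is a simplified illustration, assuming a lossless battery, hourly steps, and a hypothetical load profile and battery size; it is not how any particular Vertiv controller works.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Battery:
    capacity_kwh: float
    max_power_kw: float
    soc_kwh: float  # current state of charge


def peak_shave(load_kw: List[float], grid_cap_kw: float, battery: Battery,
               step_h: float = 1.0) -> List[float]:
    """Return grid import per step; the battery discharges above the cap and recharges below it.

    Simplified: lossless battery, constant load within each step. If the battery
    runs out, grid import can still exceed the cap.
    """
    grid = []
    for load in load_kw:
        if load > grid_cap_kw:
            # Cover the excess, limited by power rating and stored energy.
            discharge = min(load - grid_cap_kw, battery.max_power_kw, battery.soc_kwh / step_h)
            battery.soc_kwh -= discharge * step_h
            grid.append(load - discharge)
        else:
            # Recharge using headroom under the cap, limited by power rating and free capacity.
            charge = min(grid_cap_kw - load, battery.max_power_kw,
                         (battery.capacity_kwh - battery.soc_kwh) / step_h)
            battery.soc_kwh += charge * step_h
            grid.append(load + charge)
    return grid


bess = Battery(capacity_kwh=500.0, max_power_kw=300.0, soc_kwh=500.0)
profile = [600, 700, 1100, 1200, 900, 650]  # hypothetical hourly site load, kW
print(peak_shave(profile, grid_cap_kw=1000.0, battery=bess), bess.soc_kwh)
```

With a 1,000 kW cap, grid import in this example never exceeds the cap, and the battery ends the period back at full charge.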

Looking further ahead, small modular reactors (SMRs) are emerging as a potential solution for large power consumers, including data centers. Several companies are developing SMRs, with availability expected around the end of the decade. These reactors promise to provide a stable and efficient power source, addressing both the energy demands and environmental concerns associated with data centers.

Key takeaway

The energy availability challenges faced by data centers are multifaceted, involving regulatory, environmental, and technological aspects. As AI continues to drive up power demands, data centers must adapt by prioritizing energy-efficient solutions and exploring alternative energy sources. The trends towards microgrid deployments and the development of small modular reactors are promising steps in this direction. By staying ahead of these challenges, data centers can continue to support the digital economy while managing their energy footprint effectively.

The rise of AI Factories as a collaborative effort

Read how the evolving requirements of AI factories are changing the way industry players collaborate on technology development, address challenges, and find innovative opportunities for strategy and growth.

AI Factories are poised to change how we think about data centers in terms of compute, power, and thermal infrastructure. Rack densities are projected to reach up to 1,000 kW per rack, a considerable leap from the industry average of 8.4 kW in 2020.

These changes in rack densities and the increasing complexity of AI applications necessitate collaboration among stakeholders to strategize for current needs and future growth. Chip developers, customers, power and cooling infrastructure manufacturers, utilities, and other industry players are coming together to develop and support transparent roadmaps for AI adoption. This collaborative approach is essential to address the challenges and opportunities presented by AI Factories.

Watch: Vertiv & Compass Datacenters collaborate on cooling and maintenance innovations for AI

Changing requirements and developments

AI factories are specialized data centers designed specifically for artificial intelligence (AI) workloads and machine learning operations. Due to the intensive computational demands of AI training and inference, AI factories require significantly more power and cooling capabilities than traditional data centers.

AI factories emphasize high-performance computing (HPC) workloads, particularly those involving massive datasets and complex machine learning algorithms. These facilities include racks of servers equipped with high-capacity graphics processing units (GPUs), tensor processing units (TPUs), or other purpose-built accelerators. The extreme density of these systems necessitates innovative approaches to power distribution, cooling, and operational design.

From a business model perspective, this shift has opened new industry use cases and revenue streams in markets leveraging predictive analytics, natural language processing, and generative AI solutions. Additionally, companies are adopting consumption-based models that align computing costs with actual usage, rather than traditional server leasing models.

Powered by AI: Development tools, partnerships for integration

Through our work with leading chipmakers, we know that hardware for training broad AI requires more advanced power and cooling approaches and technologies than non-AI IT loads, a challenge compounded by the race to be first to establish AI Factories. Even at this early stage, we expect inferencing to require as much as 10 times the capacity of the training phase, particularly at the edge. Put simply, if operators deploy 10 GW for training, inferencing could require up to 100 GW across distributed locations, which is where most of the new opportunities for business, development, and cloud/on-premises data repatriation will be found.

Collaborating on solutions is particularly crucial as AI factory demands drive a need for seamless IT and infrastructure integration. For example, chip designers are now working directly with cooling manufacturers to optimize server and rack configurations. Meanwhile, data center developers and AI customers are co-developing solutions tailored to particular workloads, offering flexibility without compromising performance.

These alliances reflect an acknowledgment that no single entity can address these challenges alone, and all parties are navigating a landscape that has yet to be standardized. As an industry, this collective approach facilitates cost-sharing, innovation acceleration, and reduced risks for all parties involved. Furthermore, collaborative efforts around standardization will empower faster deployment and scalability, ensuring that AI Factories meet the demands of tomorrow's game-changing AI technologies.

Vertiv™ MegaMod™ CoolChip with Vertiv™ Perimeter Cooling

Vertiv™ MegaMod™ CoolChip with Vertiv™ Liebert® DCD rack-mounted rear door heat exchanger

Vertiv™ MegaMod™ CoolChip with Vertiv™ In-row Cooling

Figure 1. Vertiv developed three Vertiv™ MegaMod™ CoolChip concept designs to support direct-to-chip liquid cooling solutions. They are differentiated in how they address the air-cooled architecture portion. Each air-cooled architecture has benefits, from flexible perimeter cooling to room-neutral in-row cooling and space-saving rear-door heat exchangers.

Watch: Vertiv™ MegaMod™: Scalable modular data center solutions for medium to large data centers

Taking the next steps

The development of AI Factories represents a significant milestone in the evolution of data centers and computing power. The unprecedented increase in rack densities and the growing complexity of AI applications require a collaborative approach among industry players. By working together, stakeholders can create transparent roadmaps, innovative solutions, and end-to-end collaborative reference designs to drive AI adoption. As we move forward, the integration of IT and infrastructure through manufacturing partnerships will be key to unlocking the full potential of AI factories.

How AI is transforming data center threats and security

AI amplifies both threats and security demands. As attacks grow smarter, monitoring and adaptive defenses provide the necessary evolution in protection. Vertiv’s end-to-end solutions protect data centers where they’re most vulnerable, blending physical data center security with cyber vigilance. This integrated approach safeguards assets from facility perimeters to server racks while maintaining continuous compliance.


AI is improving data security but also enabling more sophisticated attacks. Staying ahead requires unified, adaptive defenses built for an evolving threat landscape. As AI adoption accelerates, driving trends like AI factories and soaring rack densities, it brings significant changes across industries and infrastructure. It also adds to the complexity of advanced threats and calls for greater cybersecurity resilience.

AI-driven cyberattacks

While organizations use AI to bolster defenses, cybercriminals now deploy AI-driven tools to execute more sophisticated, targeted attacks at scale.

And the results are alarming: ransomware grows more destructive as AI automates vulnerability scanning and precision targeting of legacy systems. Meanwhile, self-adapting malware evolves to circumvent traditional defenses entirely. IoT devices, industrial control systems, and supply chain components are often weak links in otherwise secure networks.

This new generation of threats demands equally intelligent countermeasures. As AI-powered attacks learn and evolve in real time, security protocols can no longer rely on static defenses. The battle has shifted to adaptive systems capable of anticipating these dynamic threats, not just reacting to them.

Protecting physical and digital infrastructure

Data center security protects physical and digital infrastructure against threats and attacks that target uptime, daily operations, applications, and data. The attack surface is wider than ever due to the growing number of technologies and services companies offer.

Data center security best practices focus on multiple layers of protection:

Protecting the property boundaries: The first line of defense involves physical barriers and monitored perimeter zones around secured areas. These deter intrusions, while monitoring systems track external activity and minimize false alarms.

Managing facility access: Manage both permanent and temporary access through credential verification and activity monitoring at entry points. Automate privilege tracking and compliance workflows.

Interior access control: Balance staff mobility with strict security by monitoring movements, enforcing least-privilege access, and deploying layered protections. Unified dashboards integrate door locks, biometric authentication, alarm systems, and CCTV monitoring while tracking power and environmental status in real time.

Securing critical infrastructure: Operational servers demand maximum protection. Redundant locks, emergency sensors, and IoT monitoring maintain uptime. Private networks, unified dashboards, and automated logging safeguard server rooms and cabinets, ensuring continuous processing while tracking all activity.

Fostering a security-first culture: Employees remain the most vulnerable security link, but organizations can strengthen defenses by enforcing least-privilege access, maintaining adaptable security protocols, and conducting regular cybersecurity training to keep staff alert to evolving threats.
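The least-privilege zoning described in these layers can be sketched as a default-deny access check: each credential lists only the zones it is explicitly granted, temporary credentials expire, and every decision is logged. The zone names, roles, and classes below are hypothetical, chosen to mirror the perimeter, facility, interior, and critical layers above; a real deployment would sit behind a physical access control system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class Credential:
    holder: str
    zones: FrozenSet[str]               # zones explicitly granted; nothing is implied
    expires: Optional[datetime] = None  # None means a permanent credential


@dataclass
class AccessController:
    log: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def request(self, cred: Credential, zone: str, now: datetime) -> bool:
        """Default deny: grant only if the zone is listed and the credential is unexpired."""
        allowed = zone in cred.zones and (cred.expires is None or now < cred.expires)
        # Every decision, granted or denied, is recorded for audit and compliance.
        self.log.append((now.isoformat(), cred.holder, zone, "granted" if allowed else "denied"))
        return allowed


now = datetime(2025, 1, 15, 9, 0)
tech = Credential("facilities-tech", frozenset({"perimeter", "facility", "interior"}))
contractor = Credential("hvac-contractor", frozenset({"perimeter", "facility"}),
                        expires=now + timedelta(hours=8))

ac = AccessController()
print(ac.request(tech, "critical", now))                             # False: never granted
print(ac.request(contractor, "facility", now))                       # True: within visit window
print(ac.request(contractor, "facility", now + timedelta(hours=9)))  # False: expired
```

Listing zones explicitly, rather than letting a higher role inherit every lower zone, keeps server rooms and cabinets closed unless someone deliberately grants them.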

Multi-layered defense

In addition to implementing advanced security technologies, extreme diligence is essential in maintaining a robust cybersecurity posture. This involves continuously monitoring the network for potential threats, conducting regular security assessments, and staying informed about the latest developments in cybersecurity.

While AI offers powerful tools for enhancing cybersecurity, they complement rather than replace defense-in-depth, a multi-layered approach that implements multiple security measures at different levels of the network to protect against a wide range of threats. Key components of a network defense-in-depth strategy include:

Firewalls and sandboxes: Firewalls act as the first line of defense and help block unauthorized access to the network. Sandboxes are isolated virtual environments in which suspicious code can be run so that an attack’s initial routines are triggered safely, which is especially useful against automated malicious deployments.

Intrusion detection and prevention systems (IDPS): These systems monitor network traffic for suspicious activity and can automatically block potential threats.

Endpoint security: By protecting individual devices within the network, endpoint security solutions help prevent malware and other malicious software from spreading.

Encryption: Encrypting sensitive data ensures that even if it is intercepted, it cannot be read without the proper decryption key.

Regular updates and patching: Keeping software and systems up to date helps protect against known vulnerabilities.

The AI security imperative


Discover how to harden your infrastructure: Vertiv™ Data Center IT Infrastructure Security Solutions

Government and regulatory focus on energy use and impact

Learn how the changing requirements and energy use of AI data centers will become a focal point for discussions on development, regulation, mandates, and environmental goals.

How the surge in AI data center resource consumption is driving tighter regulations and efforts toward enhanced efficiency.

Alongside energy use, today’s landscape demands equal attention to direct AI governance. Governments are shifting from pure sustainability mandates to comprehensive governance frameworks addressing AI’s ethical use, data sovereignty, and infrastructure impact, creating a dual compliance challenge for operators.

Intensifying focus on energy, resources, and environmental impact

AI's computational intensity makes data centers prime targets for regulations addressing sustainability and resource consumption. This is no longer just about industry metrics such as power usage effectiveness (PUE); regulators are scrutinizing the total environmental footprint.

Expect stricter mandates on carbon emissions reporting and reduction, energy efficiency standards (beyond PUE, potentially including compute efficiency metrics like Performance/Watt), water usage effectiveness (WUE), and responsible e-waste management.

As highlighted by consulting firm Deloitte's analysis of global AI compliance, operators must simultaneously address fairness, privacy, safety, transparency, competition, and accountability – often with conflicting requirements across jurisdictions. A one-size-fits-all compliance strategy is ineffective.

Sovereign AI and divergent regulatory paths

A dominant trend is the global push towards "sovereign AI" – a nation's strategic effort to control the development, deployment, regulation, and utilization of AI infrastructure, workforce, technologies, and associated data. This drive is often accompanied by data and cloud repatriation trends at national and corporate levels.

The European Union’s AI Act is landmark legislation that establishes a risk-based framework across the EU. AI systems are categorized by potential harm (unacceptable, high, limited, or minimal risk), with stringent requirements such as rigorous testing, transparency, human oversight, and data governance imposed on high-risk applications (e.g., critical infrastructure, employment, biometrics). Its emphasis is on mitigating risks while fostering trustworthy innovation.

China prioritizes centralized control, tightly integrating AI development with national security and social stability goals. Frameworks like the Cybersecurity Law (CSL) and AI Safety Governance Framework emphasize data security, ethical AI use, and alignment with state objectives.

The US currently lacks a unified federal AI law. Instead, a patchwork of state-level regulations is emerging, reflecting a broader strategy favoring sector-specific innovation and maintaining technological dominance. This creates significant complexity for operators serving multiple states.

Enforcement consistency and stringency will vary widely. Expect more countries to implement their own sovereign AI mandates, focusing initially on high-impact sectors like healthcare, finance, autonomous vehicles, and environmental applications. The core challenge for operators is navigating this complex, divergent global landscape.
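For risk-based frameworks such as the EU AI Act’s tiers, a practical first step is an internal inventory that tags each AI workload and maps it to a tier, taking the highest tier any tag triggers. This is a minimal sketch; the tag names and the mapping are hypothetical and illustrative, not a legal classification under any regulation.

```python
from enum import IntEnum
from typing import Iterable


class RiskTier(IntEnum):
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical, illustrative mapping from workload tags to tiers.
TAG_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "critical_infrastructure": RiskTier.HIGH,
    "employment": RiskTier.HIGH,
    "biometrics": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "logistics_optimization": RiskTier.MINIMAL,
}


def classify(tags: Iterable[str]) -> RiskTier:
    """Return the highest tier triggered by any tag.

    Unknown tags are flagged for manual review rather than guessed.
    """
    tags = list(tags)
    unknown = [t for t in tags if t not in TAG_TIERS]
    if unknown:
        raise ValueError(f"unmapped tags need manual review: {unknown}")
    return max((TAG_TIERS[t] for t in tags), default=RiskTier.MINIMAL)


print(classify(["chatbot", "credit_scoring"]).name)  # HIGH
print(classify(["logistics_optimization"]).name)     # MINIMAL
```

Taking the maximum means a single high-risk capability determines the obligations for the whole workload, which is the conservative choice when jurisdictions disagree.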

Strategic compliance: Turning mandates into opportunity

Viewing governance solely as a regulatory burden is a missed opportunity. Forward-thinking operators treat it as a strategic necessity for risk mitigation, investor confidence, data protection, and long-term resilience. Here’s your actionable roadmap:

- Establish agile AI governance frameworks

Integrate legal and compliance expertise early in AI project planning and implementation. Define precisely what data you collect, how AI models are trained and inferenced using this data, and where it's stored. Ensure organizational procedures are explicitly designed to comply with relevant laws (GDPR, EU AI Act, CSL, CCPA, etc.) from the outset. Map data flows meticulously.

- Implement "least data, least privilege" and fortify security

Adopt the principle of collecting only the minimum sensitive data essential for specific business operations. Internally, enforce strict access controls based on the "least privilege" principle – personnel access only the data critical for their specific role. Deploy layered, redundant cybersecurity defenses (encryption, MFA, ZTNA, continuous monitoring, regular audits) to protect data integrity and meet stringent regulatory requirements for AI systems and their training data.

- Adopt a risk-based compliance approach

Classify your AI workloads and applications based on their inherent risk level (e.g., customer-facing credit scoring = high risk; internal logistics optimization = lower risk). Apply the corresponding regulatory requirements rigorously. Implement continuous monitoring to detect and mitigate risks related to bias, fairness (especially in training data and model outputs), explainability, and transparency throughout the AI lifecycle.

- Proactive industry collaboration and engagement

Actively participate in industry forums (e.g., Open Compute Project, ISO/IEC standards committees, ASHRAE, regional data center associations) and working groups focused on AI and sustainability. Collaborate with peers, technology vendors, and even regulators to share knowledge, best practices, and resources.

- Invest in critical digital infrastructure and unified operations

Prioritize upgrades that enhance efficiency and reduce environmental impact. This includes deploying high-efficiency power systems, advanced cooling solutions, intelligent power management software, and renewable energy integration. Crucially, implement a secure, unified monitoring platform to gain real-time, holistic visibility into energy consumption (PUE, CUE, WUE), environmental conditions, and equipment performance across your entire infrastructure. This enables:

- Proactive optimization for peak efficiency.

- Accurate reporting for compliance (energy, carbon, water).

- Rapid incident response.

- Data-driven capacity planning and infrastructure decisions.

- Scalability to adapt to future regulations and business needs.

Design new facilities and retrofits with modularity and scalability in mind to accommodate evolving AI workloads and efficiency mandates cost-effectively.
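The efficiency metrics such a monitoring platform reports are simple ratios over metered data, following The Green Grid definitions: PUE is total facility energy divided by IT equipment energy, WUE is site water usage (liters) divided by IT equipment energy (kWh), and CUE is total CO2 emissions from the facility’s energy divided by IT equipment energy. The sketch below assumes a single energy source with one emission factor; the meter values and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MeterPeriod:
    total_facility_kwh: float   # all energy entering the facility
    it_equipment_kwh: float     # energy delivered to IT equipment
    site_water_liters: float    # water consumed on site (e.g., for cooling)
    grid_kgco2_per_kwh: float   # emission factor of supplied energy (assumed single source)


def efficiency_metrics(m: MeterPeriod) -> dict:
    """Compute PUE, WUE, and CUE for one metering period."""
    if m.it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    pue = m.total_facility_kwh / m.it_equipment_kwh
    wue = m.site_water_liters / m.it_equipment_kwh
    cue = (m.total_facility_kwh * m.grid_kgco2_per_kwh) / m.it_equipment_kwh
    return {"PUE": pue, "WUE_L_per_kWh": wue, "CUE_kgCO2_per_kWh": cue}


month = MeterPeriod(total_facility_kwh=1_300_000, it_equipment_kwh=1_000_000,
                    site_water_liters=1_800_000, grid_kgco2_per_kwh=0.4)
for name, value in efficiency_metrics(month).items():
    print(f"{name}: {value:.2f}")
```

With a single emission factor, CUE equals that factor multiplied by PUE (0.4 × 1.3 = 0.52 here), so PUE improvements also lower CUE.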

Stay ahead of the regulatory curve

AI and energy regulations demand more than box-ticking. Operators who bake governance into operations, deploy smart critical digital infrastructure, and collaborate proactively won’t just meet mandates—they’ll unlock efficiency gains and investor confidence.

Ready to transform compliance into an advantage? Discover how Vertiv’s monitoring and infrastructure solutions keep you ahead of evolving regulations in the AI era.


Additional Resources

Data center trends 2025: Vertiv predicts industry efforts to support, enable, leverage, and regulate AI

Unleash your AI evolution with Vertiv

Dynamic power