From managing fluids to handling medium voltage power, liquid-cooled data centers demand a new level of service expertise.

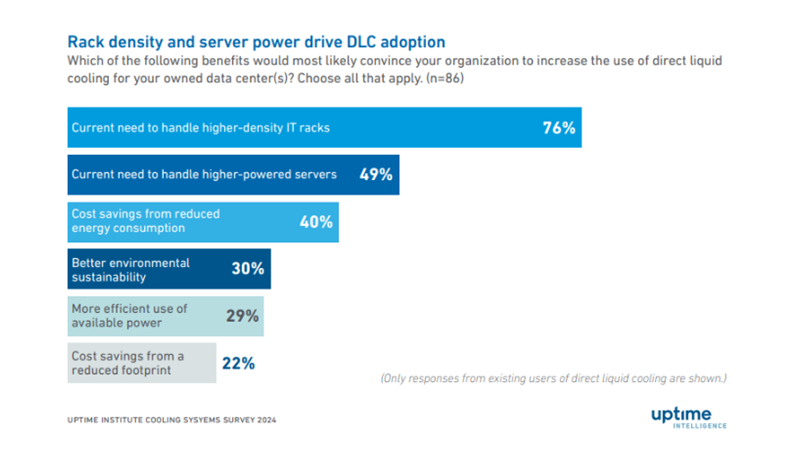

Artificial intelligence (AI) and accelerated computing are pushing data center racks to higher power densities, prompting a shift toward more advanced thermal management. In a 2024 Uptime Institute survey, 76% of respondents pointed to higher-density IT racks and 49% cited higher-powered servers as key drivers for adopting direct liquid cooling (DLC).

Figure 1. Key drivers influencing direct liquid cooling (DLC) adoption in data centers

This trend introduces new operating conditions for service teams. Fluid management, medium-voltage safety, IT and facilities coordination, and specialized skill sets are now critical to maintaining reliability in liquid-cooled environments. Liquid cooling systems rely on closed-loop circulation, heat transfer components, and tightly controlled flow rates. These elements require precise handling to prevent fluid contamination, maintain performance, and keep power and cooling coordinated for efficiency and reliability.

The following five challenges highlight why specialized service support is essential for liquid-cooled infrastructure:

1. Managing liquid cooling technology in high-density environments

The integration of liquid cooling and AI chips in data centers has made protecting high-value racks more critical than ever. Contaminants from inadequate filtration can clog cold plates, reduce heat transfer, and trigger unexpected shutdowns. The impact can be severe: lost workloads, emergency repairs, and potential equipment damage.

Experienced service teams should implement comprehensive training programs to equip engineers with essential skills for preventing contamination and managing liquid cooling systems. Advanced filtration mechanisms and real-time monitoring tools are vital to maintaining fluid cleanliness and detecting contaminants before they cause damage.


2. Safety concerns with medium voltage power

The integration of medium voltage (MV) power systems in high-density, liquid-cooled data centers brings unique safety challenges. MV environments create arc flash hazards and demand a level of electrical competence most data center staff haven’t historically needed.

Working with higher voltages requires enhanced awareness and protective gear to guard against arc flashes. This level of complexity can introduce stress, slow response times, and lead to inefficiencies if personnel are not equipped with the proper tools and knowledge.

Professional service teams address these challenges through:

- Comprehensive certification programs: Specialized training in MV environments, including arc flash prevention and electrical safety.

- Protective gear: Arc-rated suits, gloves, and face shields to mitigate risks.

- Clear safety protocols: Standardized workflows with mandatory safety checks reduce accidents and enhance compliance.

3. Uptime and reliability demands

Maximizing uptime starts with proactive maintenance and a resilient system design. Service teams can leverage predictive analytics to monitor fluid levels, watch for component wear, and track environmental conditions in real time.

Routine inspections are essential for detecting vulnerabilities before they escalate into disruptions, while well-developed escalation protocols empower staff to respond quickly and keep downtime to a minimum. With a reliable maintenance framework, organizations can meet uptime demands and operational excellence standards.
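As a rough illustration of the kind of trend monitoring described above, the sketch below fits a straight line to recent coolant reservoir level readings and estimates how long until the level reaches a service threshold. It is a minimal example under stated assumptions, not a production analytics method: the function name, the sample format (hours, level in percent), and the threshold value are all hypothetical, and real predictive tools typically use richer models and more signals.

```python
from typing import Optional, Sequence, Tuple


def hours_until_threshold(
    samples: Sequence[Tuple[float, float]], threshold: float
) -> Optional[float]:
    """Estimate hours until a falling reading reaches `threshold`.

    `samples` is a sequence of (time_in_hours, level) pairs (hypothetical format).
    Returns None if there are too few samples or the level is not falling.
    """
    if len(samples) < 2:
        return None

    times = [t for t, _ in samples]
    levels = [y for _, y in samples]
    mean_t = sum(times) / len(times)
    mean_y = sum(levels) / len(levels)

    # Ordinary least-squares fit: level = intercept + slope * time
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        return None  # all samples share one timestamp, no trend to fit
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in samples)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t

    if slope >= 0:
        return None  # level is steady or rising, nothing to predict

    # Time at which the fitted line crosses the threshold,
    # measured from the most recent sample (never negative).
    t_cross = (threshold - intercept) / slope
    return max(0.0, t_cross - max(times))


# Example: level drops one percentage point per hour from 100%.
readings = [(0.0, 100.0), (1.0, 99.0), (2.0, 98.0), (3.0, 97.0)]
remaining = hours_until_threshold(readings, threshold=90.0)
print(remaining)  # 7.0 hours until the assumed 90% service threshold
```

An estimate like this could feed an escalation protocol: a short remaining time triggers an inspection before the level becomes a disruption, while a `None` result indicates no downward trend in the sampled window.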

4. IT-facilities coordination

Liquid cooling creates hard dependencies between IT systems and facilities infrastructure. Flow rates, coolant quality, and CDU performance all impact rack-level thermal performance. This blurs historical boundaries and demands tighter collaboration.

Without coordination, simple miscommunications, such as changing coolant flow rates without informing IT or adding racks without notifying facilities, can lead to overheating or downtime.
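The link between flow rate and rack temperature follows from the standard energy balance Q = ṁ · c_p · ΔT: for a fixed heat load, less flow means a larger coolant temperature rise. The sketch below works through that arithmetic. It assumes plain water properties (specific heat of about 4186 J/(kg·K) and density of about 997 kg/m³); actual coolants such as water-glycol mixtures have different values, and the function names and the 100 kW example are illustrative only.

```python
# Assumed fluid properties for plain water near room temperature.
WATER_CP_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_M3 = 997.0


def required_flow_lpm(
    heat_load_kw: float,
    delta_t_k: float,
    cp: float = WATER_CP_J_PER_KG_K,
    density: float = WATER_DENSITY_KG_PER_M3,
) -> float:
    """Flow in liters per minute needed to absorb a heat load at a given rise."""
    mass_flow_kg_s = (heat_load_kw * 1000.0) / (cp * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


def temperature_rise_k(
    heat_load_kw: float,
    flow_lpm: float,
    cp: float = WATER_CP_J_PER_KG_K,
    density: float = WATER_DENSITY_KG_PER_M3,
) -> float:
    """Coolant temperature rise in kelvin for a given heat load and flow."""
    mass_flow_kg_s = (flow_lpm / 1000.0 / 60.0) * density
    return (heat_load_kw * 1000.0) / (cp * mass_flow_kg_s)


# Example: a hypothetical 100 kW rack designed for a 10 K coolant rise.
design_flow = required_flow_lpm(100.0, 10.0)
print(round(design_flow, 1))  # about 143.8 L/min

# Halving the flow without telling IT doubles the temperature rise.
print(round(temperature_rise_k(100.0, design_flow / 2.0), 1))  # 20.0 K
```

The same relationship works in the other direction: adding racks raises the heat load, so the existing flow yields a higher temperature rise unless facilities adjusts the loop.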

Service teams establish reliable collaboration through:

- Regular joint training sessions to build cross-departmental understanding.

- Shared dashboards and monitoring tools that provide both teams with real-time visibility of system performance.

- Clear communication protocols for maintenance and system changes.

5. Skill gaps and training

Working with dielectric fluids, heat transfer fluids, and cold plates takes more than basic know-how. It requires a solid grasp of system mechanics, safety protocols, and best practices. Without it, mistakes can lead to reduced performance or even workplace incidents.

Professional service teams partner with industry experts to deliver targeted training and certification programs. Hands-on workshops and simulations help technicians gain confidence and apply skills in real-world scenarios. Ongoing learning keeps teams current with the latest tools, trends, and safety standards, reducing risks and maintaining a competitive advantage.
