You are likely aware of the industry buzz around generative artificial intelligence (AI) and its impact on the data center industry.

Perhaps you have read an article about it, or even read through the AI infrastructure demands outlined in the April 17, 2023 issue of DCD’s magazine. The wave of demand that AI will likely generate will push compute densities even further past what has been forecasted.

With the significant amount of computational power that AI requires, the power consumption of the next generation of hardware will in turn create significant amounts of heat. This heat causes performance issues and can lead to IT hardware failure if the equipment is not kept within its operating temperature limits.

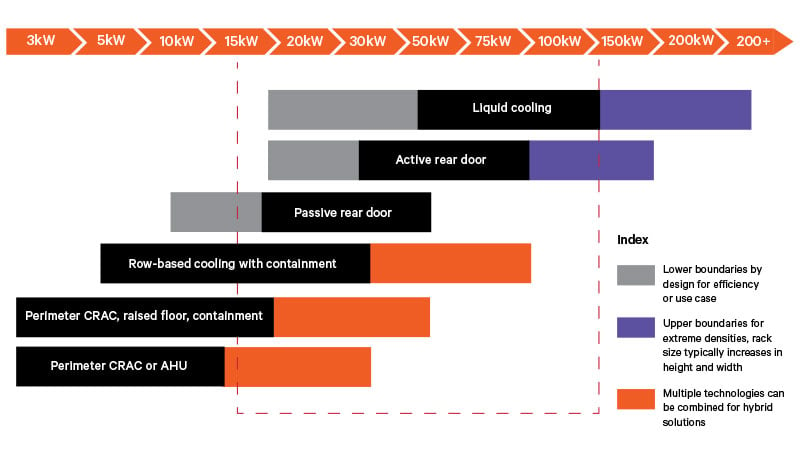

For those facility operation teams deploying high-density solutions for any emerging tech, be it AI, or low latency streaming and gaming, tackling the thermal management challenge is paramount.

When looking at the infrastructure required for deploying the high-performance compute that generative AI demands, we find liquid cooling systems offer a compelling case as the premier solution for addressing the high-heat problem that air-cooling cannot efficiently manage.

According to the Dell’Oro Group, liquid cooling market revenue will approach $2 billion by 2027, a 60% CAGR for the years 2020 to 2027, as organizations adopt more cloud services, use AI to power advanced analytics and automated decision making, and enable blockchain and cryptocurrency applications.

With AI now a trending topic among everyday consumers, companies looking to include emerging technology in their operations will benefit from reviewing the liquid cooling options available today that can scale for tomorrow.

The implementation of liquid cooling deployments for AI computing devices requires the adoption of innovative rack systems specifically designed to accommodate and efficiently manage liquid cooling infrastructure. These rack systems are typically fitted with solutions such as a rear door heat exchanger (RDHx).

Taking up zero additional floor space in the data center, an RDHx is a great option for introducing liquid cooling architecture into the data center without overhauling the entire white space.

These heat exchangers are offered in configurations using different cooling mediums: refrigerant, chilled water, and glycol. Each cooling medium has its own performance characteristics.

Refrigerant-based mediums have excellent thermal conductivity, allowing them to effectively transfer heat away from components, resulting in improved cooling efficiency. They also have a high heat capacity, meaning they can absorb large amounts of heat before reaching saturation, ensuring consistent cooling performance even under heavy workloads.

Chilled water systems also offer scalability, as they can be designed to handle varying heat loads and accommodate future expansion. Additionally, chilled water-based systems can leverage existing infrastructure, such as cooling towers or heat exchangers, resulting in cost savings and improved energy efficiency.

Glycol, meanwhile, has excellent heat transfer properties, allowing it to efficiently absorb and dissipate heat from the components it comes into contact with. Additionally, glycol has a higher boiling point than water, reducing the risk of coolant evaporation and system overheating.

An RDHx is also either passive, relying on the servers' own fans, or active, using fans built into the door, to draw the air through the heat exchange coil.

Introducing this technology into the data center also offers a ‘room-neutral cooling solution,’ meaning the air temperature exiting the RDHx is near the ambient room temperature, putting less strain on your perimeter cooling units.

An RDHx is a great solution for adding higher-density racks to a data center that is looking to scale into liquid cooling. Starting with a passive rear door today can help you scale for tomorrow when your densities increase.

RDHx solutions offer a gateway into liquid cooling, but many organizations are looking for a more focused solution for their clusters. For a liquid cooling deployment that is not a retrofit, the focus falls on two main approaches: immersion cooling and direct-to-chip cooling.

Direct-to-chip liquid cooling couples a cold plate directly to the high-heat components: the CPU, the GPU, and in some cases memory modules and power supplies.

Direct-to-chip cold plates sit atop the board’s heat-generating components to draw off heat through either single-phase cold plates or two-phase fluids. These cooling technologies can remove about 70-75 percent of the heat generated by the total equipment in the rack, leaving 25-30 percent that can be readily removed through air-cooling systems.
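To make that split concrete, here is a minimal Python sketch of the arithmetic. The 40 kW rack load and the function name `split_rack_heat` are assumptions chosen purely for illustration; only the 70-75 percent capture range comes from the text above.

```python
from typing import Tuple


def split_rack_heat(rack_kw: float, liquid_fraction: float) -> Tuple[float, float]:
    """Return (heat to liquid, heat left for air) in kW for one rack."""
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    to_liquid = rack_kw * liquid_fraction
    return to_liquid, rack_kw - to_liquid


# Hypothetical rack load for illustration; not a figure from the article.
RACK_KW = 40.0

# The 70-75 percent capture range quoted for direct-to-chip cold plates.
for fraction in (0.70, 0.75):
    to_liquid, to_air = split_rack_heat(RACK_KW, fraction)
    print(f"{fraction:.0%} capture: {to_liquid:.1f} kW to liquid, {to_air:.1f} kW to air")
```

Under that assumed 40 kW load, roughly 10 to 12 kW per rack would still need to be handled by air cooling, which is why direct-to-chip deployments are usually planned alongside room or rear-door cooling rather than instead of it.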

Immersion cooling is another form of liquid cooling technology, one that can remove 100 percent of the heat to liquid. There are complexities when dealing with dielectric fluids, and for operations teams it is a radically different approach to manage than traditional air cooling.

There are, of course, some challenges associated with liquid cooling. The main concern is the risk of leaks or other failures that could cause damage to the critical hardware. However, with careful design and well thought-out implementation, these risks can be minimized, and the benefits of liquid cooling can be effectively harnessed.

Data center operators must be ready to turn to liquid cooling to stay competitive in the age of generative AI. The benefits of liquid cooling, such as enabling higher efficiency, greater rack density, and improved cooling performance, make it an essential approach for organizations that want to incorporate cutting-edge technologies and meet the cooling needs of the resulting high-density workloads.

For what’s next in liquid cooling, read the Cooling Transformation eBook from DCD and Vertiv.