Innovations Enabling Reduced Environmental Impact

Data center operators today have access to best practices and technologies that weren’t available five or 10 years ago. Here are some of the newer technologies that are being deployed or planned to increase asset utilization, maximize efficiency, reduce emissions, and minimize water consumption.

Intelligent Power Management

More intelligent equipment and new controls are enabling data center operators to improve the utilization and efficiency of the critical power systems that are required to achieve high levels of data center availability.

One strategy is using the overload capacity designed into some UPS systems to handle short and infrequent demand peaks rather than oversizing equipment based on these peaks. This capability is also being used to support new N+1 redundancy architectures. These architectures use the overload capacity, combined with workload segmentation based on availability requirements, to enable higher UPS utilization rates while maintaining redundancy.
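To make the sizing idea concrete, the sketch below checks whether a sampled load profile stays within a UPS's overload envelope instead of requiring the UPS to be rated for the peak. The `UpsRating` fields, the 125% overload ceiling, and the 60-second limit are hypothetical placeholders. Real overload ratings and permitted durations come from the manufacturer's specifications.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UpsRating:
    rated_kw: float        # continuous (nameplate) rating
    overload_pct: float    # hypothetical: 1.25 means 125% of rated_kw
    max_overload_s: float  # hypothetical: longest allowed continuous overload


def can_ride_through(rating: UpsRating, load_kw: list[float], step_s: float) -> bool:
    """Return True if a load profile sampled every step_s seconds never exceeds
    the overload ceiling and no overload excursion outlasts max_overload_s."""
    ceiling = rating.rated_kw * rating.overload_pct
    overload_run_s = 0.0
    for kw in load_kw:
        if kw > ceiling:
            return False  # peak beyond what the overload capacity can absorb
        if kw > rating.rated_kw:
            overload_run_s += step_s
            if overload_run_s > rating.max_overload_s:
                return False  # peak is not "short" enough
        else:
            overload_run_s = 0.0  # back within rating; reset the excursion timer
    return True


# Hypothetical 500 kW UPS with 125% overload for up to 60 s, sampled every 10 s
ups = UpsRating(rated_kw=500, overload_pct=1.25, max_overload_s=60)
print(can_ride_through(ups, [420, 480, 560, 590, 470, 450], step_s=10))  # True
```

Under these assumptions, a 500 kW unit covers a brief 590 kW peak that would otherwise push the design toward a larger UPS sized for that peak.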

The efficiency of UPS systems is being enhanced through more sophisticated implementations of “eco mode” operation. In eco mode, a double-conversion UPS system operates in bypass mode when the utility is delivering acceptable power, eliminating the energy required to condition power, and switches to double-conversion mode when power quality degrades. In traditional eco mode implementations, the switch between modes can create voltage variations and harmonics, which has limited the adoption of eco mode. A newer approach, Dynamic Online Mode, keeps the output inverter active but delivering no power while the UPS is in bypass. This delivers the efficiency of eco mode while minimizing the risks associated with switching between bypass and double-conversion mode.
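The mode logic can be sketched as a simple selector. This is a simplified illustration, assuming power quality is judged only by RMS voltage deviation from nominal (real UPS systems also evaluate frequency, waveform distortion, and transients). `select_mode`, the 480 V nominal, and the 10% tolerance are hypothetical. The sketch counts how often a switch to double conversion finds the inverter de-energized, which is the condition the Dynamic Online approach is designed to avoid.

```python
from __future__ import annotations

from enum import Enum


class Mode(Enum):
    BYPASS = "bypass"
    DOUBLE_CONVERSION = "double-conversion"


def select_mode(voltage_v: float, nominal_v: float = 480.0, tolerance: float = 0.10) -> Mode:
    """Hypothetical power-quality test: stay in bypass while voltage is within tolerance."""
    deviation = abs(voltage_v - nominal_v) / nominal_v
    return Mode.BYPASS if deviation <= tolerance else Mode.DOUBLE_CONVERSION


def inverter_energized(mode: Mode, dynamic_online: bool) -> bool:
    # The inverter always runs in double conversion. In bypass, this sketch assumes
    # traditional eco mode leaves it de-energized, while Dynamic Online Mode keeps it
    # active (but delivering no power) so it is ready to pick up the load.
    return mode is Mode.DOUBLE_CONVERSION or dynamic_online


def cold_transfers(voltages: list[float], dynamic_online: bool) -> int:
    """Count bypass -> double-conversion switches that find the inverter off."""
    count = 0
    mode = select_mode(voltages[0])
    for v in voltages[1:]:
        new_mode = select_mode(v)
        if mode is Mode.BYPASS and new_mode is Mode.DOUBLE_CONVERSION:
            if not inverter_energized(mode, dynamic_online):
                count += 1
        mode = new_mode
    return count


samples = [480, 478, 400, 480, 485, 530]  # hypothetical RMS voltage readings
print(cold_transfers(samples, dynamic_online=False))  # 2
print(cold_transfers(samples, dynamic_online=True))   # 0
```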

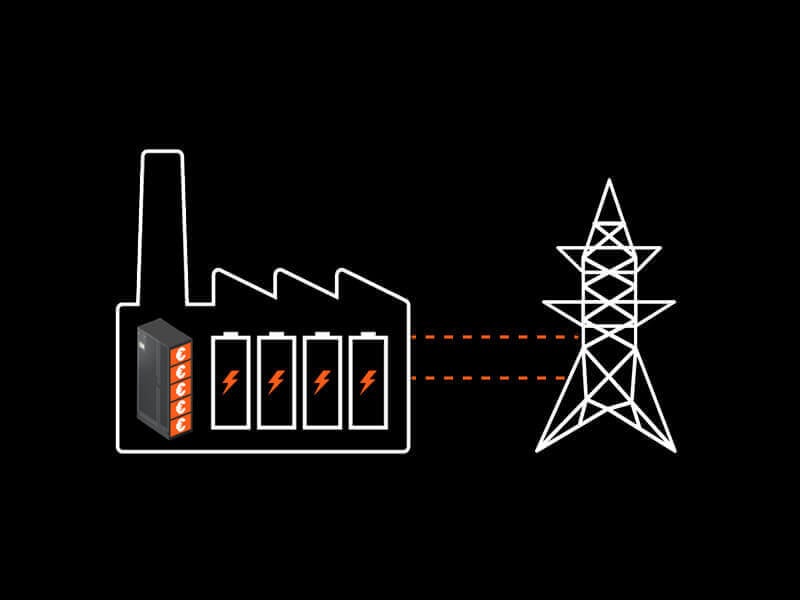

Some UPS systems also now feature dynamic grid support capabilities. These solutions could play a role in decarbonization by facilitating the transition to green energy. They provide on-site energy storage that can be used to compensate for the unpredictability of renewable energy sources and to sell excess energy back to the grid.

Renewable Energy

Renewable energy is a powerful tool for reducing carbon emissions. Operators are using multiple tactics to leverage renewable sources, including power purchase agreements (PPAs), renewable energy certificates (RECs), and migrating loads to cloud or colocation facilities that have committed to carbon-free operation.

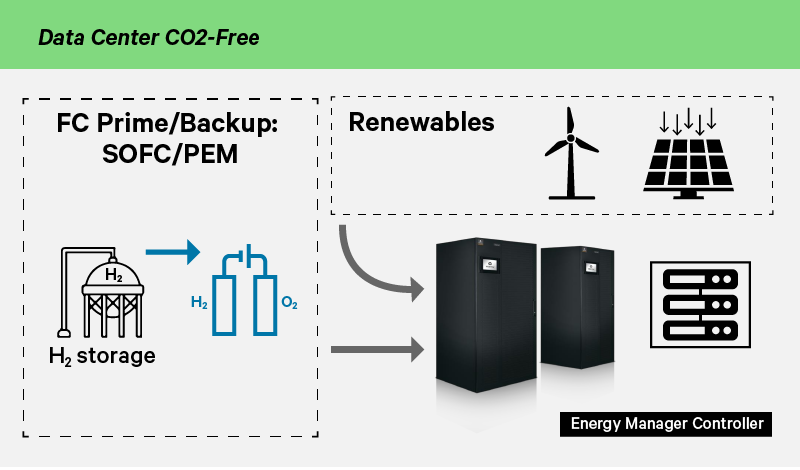

Utilities are expected to be limited in their ability to supply data centers directly with 100% renewable power for the foreseeable future. That is leading some operators to explore powering data centers with locally generated renewable energy. This could be accomplished by pairing renewable energy sources with fuel cells, electrolyzers that produce clean hydrogen from renewable energy, and UPS systems with dynamic grid support capabilities.

Here’s how it could work. Excess wind or solar energy generated on site powers electrolyzers that produce clean hydrogen for the fuel cells. Renewable energy powers the data center whenever production is high enough to do so. When the sun stops shining or the wind isn’t blowing and energy production drops, the fuel cells power the data center. When the hydrogen fuel is depleted, the UPS switches the data center to the grid to maintain continuous operations.
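The operating sequence described above can be sketched as a priority-based dispatch loop. This is a hypothetical illustration, not a control implementation: it assumes fixed-length intervals, tracks stored hydrogen as the electrical energy the fuel cells could deliver, and uses a placeholder `round_trip_eff` for the combined electrolyzer and fuel-cell efficiency. Following the description in the text, each interval is served entirely by one source.

```python
from __future__ import annotations


def dispatch(renewable_kw: list[float], load_kw: float, interval_h: float = 1.0,
             h2_store_kwh: float = 0.0, round_trip_eff: float = 0.35) -> tuple[list[str], float]:
    """Pick one source per interval in the priority order described in the text.

    round_trip_eff is a hypothetical combined electrolyzer + fuel-cell efficiency;
    h2_store_kwh is tracked as the electrical energy the fuel cells could deliver.
    """
    sources = []
    demand_kwh = load_kw * interval_h
    for gen_kw in renewable_kw:
        if gen_kw >= load_kw:
            # Renewables carry the load; the surplus runs the electrolyzers.
            sources.append("renewable")
            h2_store_kwh += (gen_kw - load_kw) * interval_h * round_trip_eff
        elif h2_store_kwh >= demand_kwh:
            # Production has dropped; fuel cells carry the load. For simplicity,
            # any partial renewable output in this interval is ignored.
            sources.append("fuel_cell")
            h2_store_kwh -= demand_kwh
        else:
            # Not enough hydrogen for a full interval: the UPS transfers to the grid.
            sources.append("grid")
    return sources, h2_store_kwh


on_site_kw = [300, 300, 50, 0, 0]  # hypothetical hourly wind/solar output
print(dispatch(on_site_kw, load_kw=100, round_trip_eff=0.5))
# (['renewable', 'renewable', 'fuel_cell', 'fuel_cell', 'grid'], 0.0)
```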

Lithium-ion Batteries

Lithium-ion batteries are becoming more cost-competitive with the valve-regulated lead-acid (VRLA) batteries traditionally used as a source of short-term backup power in data centers. Their longer life can improve reliability and result in fewer battery replacements, reducing e-waste. Lithium-ion batteries could also play a role in the transition to renewable energy. When paired with UPS systems with dynamic grid support capabilities, they provide the flexibility to sell excess energy back to the grid, enabling operators to realize incremental revenue from on-site power generation.
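The e-waste point comes down to simple arithmetic: the number of battery replacements over a facility's life depends on battery service life. The lifetimes and the 15-year facility life used below are hypothetical placeholders, not vendor figures.

```python
import math


def replacements(facility_years: float, battery_life_years: float) -> int:
    """Battery replacements needed after the initial install over the facility life."""
    return max(math.ceil(facility_years / battery_life_years) - 1, 0)


# Hypothetical service lives; substitute vendor data for real planning.
FACILITY_YEARS = 15
print("VRLA:", replacements(FACILITY_YEARS, battery_life_years=5))          # VRLA: 2
print("Lithium-ion:", replacements(FACILITY_YEARS, battery_life_years=15))  # Lithium-ion: 0
```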

Water and Energy-efficient Thermal Management

Thermal management systems are usually the largest non-IT energy load and therefore the largest contributor to data center PUE, and significant resources have been devoted to reducing their impact on PUE through more energy-efficient cooling technologies. This has led to increased use of water-intensive cooling systems, which improve cooling efficiency by using water to extend the number of hours the cooling system can operate in free-cooling mode.

Chilled water free-cooling systems achieve a balance between water utilization and energy efficiency. These water-efficient systems can reduce indirect emissions when supported by optimization strategies such as increasing air and water temperatures, system-level control, and the addition of adiabatic technologies.

For areas where water availability is limited, or where operators have committed to zero water use as part of their sustainability strategy, water-free direct expansion (DX) systems can be employed. DX free-cooling systems come close to delivering the energy efficiency of indirect evaporative systems while eliminating the millions of gallons of water those systems use. In a direct comparison of the two, a DX system with pumped refrigerant delivered a partial PUE (pPUE) about 0.01 higher than the indirect evaporative system, while reducing water usage effectiveness (WUE) from 0.25 for the indirect evaporative system to zero.
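The metrics in this comparison follow standard definitions: pPUE divides the energy of IT plus the subsystem in question by IT energy, and WUE divides annual site water use (liters) by IT energy (kWh). The sketch below applies them to a hypothetical facility with a 1 MW average IT load and hypothetical evaporative cooling energy, to show what a 0.01 pPUE difference and a WUE of 0.25 mean in absolute terms. Neither input comes from the comparison itself.

```python
def ppue(it_kwh: float, subsystem_kwh: float) -> float:
    """Partial PUE: (IT energy + energy of one subsystem) / IT energy."""
    return (it_kwh + subsystem_kwh) / it_kwh


def wue(site_water_liters: float, it_kwh: float) -> float:
    """Water usage effectiveness: liters of site water per kWh of IT energy."""
    return site_water_liters / it_kwh


IT_KWH = 1_000 * 8_760  # hypothetical: 1 MW average IT load for one year

# Hypothetical cooling energy for the indirect evaporative system (pPUE 1.10).
evap_ppue = ppue(IT_KWH, subsystem_kwh=876_000)
dx_ppue = evap_ppue + 0.01             # "about 0.01 higher", per the comparison
extra_kwh = (dx_ppue - evap_ppue) * IT_KWH

evap_water_l = 0.25 * IT_KWH           # water implied by a WUE of 0.25 L/kWh
dx_water_l = 0.0                       # water-free DX system

print(f"pPUE: evaporative {evap_ppue:.2f}, DX {dx_ppue:.2f}")
print(f"extra energy for DX: {extra_kwh:,.0f} kWh/yr")
print(f"water avoided by DX: {evap_water_l - dx_water_l:,.0f} L/yr")
print(f"WUE check: {wue(evap_water_l, IT_KWH):.2f} vs {wue(dx_water_l, IT_KWH):.2f}")
```

With these assumptions, the DX system uses about 87,600 kWh more per year while avoiding roughly 2.19 million liters of water.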

Liquid Cooling

The growing use of artificial intelligence and other processing-intensive business applications is requiring more data centers to support IT equipment racks with densities of 30 kilowatts (kW) or higher. This trend can limit the ability to optimize efficiency when air cooling technologies are used for heat removal because air cooling becomes less efficient as rack densities increase.

Liquid cooling solutions can improve the performance of high-density racks by eliminating or minimizing thermal throttling of processor clock speeds, while potentially lowering data center energy costs compared with supporting the same racks with air cooling. Liquid cooling technologies and supporting infrastructure are being integrated into existing air-cooled data centers, used in fully liquid-cooled high-performance computing (HPC) data centers, and deployed in high-capacity, fully contained liquid-cooled data center modules for edge computing. Three types of liquid cooling are being deployed.

Rear-door heat exchangers bring liquid to the back of the rack rather than directly to servers and are a proven approach for leveraging the high thermal transfer property of fluids to cool equipment racks. They replace the rear door of the rack with either a passive or active liquid heat exchanger. In a passive design, server fans push air through the heat exchanger and the cooled air is then expelled into the data center. In an active design, fans integrated with the heat exchanger supplement the airflow generated by server fans to support higher capacity cooling.

Direct-to-chip cooling takes water or refrigerant directly to the server to remove heat from the hottest components, such as CPUs, GPUs, and memory. It extracts about 80% of the heat from the rack, while the remaining heat is handled by the data center cooling system.
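A quick heat-budget sketch shows what the approximate 80% direct-to-chip figure means for the room's air-cooling system. The rack loads are hypothetical, and `liquid_fraction` defaults to the 80% cited above; actual capture ratios vary by server design.

```python
from __future__ import annotations


def heat_split(rack_kw: float, liquid_fraction: float = 0.80) -> tuple[float, float]:
    """Return (heat removed by liquid, heat left for room air cooling) in kW."""
    liquid_kw = rack_kw * liquid_fraction
    return liquid_kw, rack_kw - liquid_kw


def room_air_load_kw(racks_kw: list[float], liquid_fraction: float = 0.80) -> float:
    """Residual heat the data center's air-cooling system must still remove."""
    return sum(heat_split(kw, liquid_fraction)[1] for kw in racks_kw)


racks = [50.0, 30.0, 40.0]  # hypothetical direct-to-chip rack loads, kW
print(heat_split(50.0))                  # (40.0, 10.0)
print(f"{room_air_load_kw(racks):.1f}")  # 24.0
```

Even with most heat captured by liquid, a row of high-density racks can still leave a substantial air-side load, which is why the text notes that the remaining heat falls to the data center cooling system.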

Immersion cooling submerges compute equipment in a dielectric (non-conductive) fluid. Because the liquid is in direct contact with the equipment, server fans are not required and the approach needs no support from air-cooling systems. The Open Compute Project (OCP) has released updated immersion cooling requirements to help operators specify immersion cooling projects and ensure the safety of liquid cooling deployments.

Real-time Visibility

Greater visibility into operations often enables more precise control. One example is the use of temperature sensors to monitor data center operating temperatures. In the past, data centers were often operated at temperatures of 72 degrees Fahrenheit or lower to ensure equipment across the data center was operating at safe temperatures.

With real-time visibility into data center temperatures, operators can raise temperatures closer to the upper limit of the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommended range of 64.4 to 80.6 °F (18 to 27 °C) for IT equipment. Not every facility will be comfortable operating near the upper limit, but there is often an opportunity to raise temperatures, and every degree of increase can yield as much as 4% in energy savings.
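Real-time sensor data and the savings rule of thumb can be combined into a rough estimate. This sketch assumes the 4% figure applies per degree Fahrenheit, compounds it so savings can never exceed 100%, assumes inlet temperatures rise one-for-one with the setpoint, and uses a hypothetical 2 °F safety margin below the ASHRAE upper limit. `setpoint_headroom_f` and `max_savings_fraction` are illustrative names, and the result is an upper bound rather than a prediction.

```python
from __future__ import annotations

ASHRAE_UPPER_F = 80.6  # upper end of the ASHRAE recommended range cited above


def setpoint_headroom_f(inlet_readings_f: list[float], margin_f: float = 2.0) -> float:
    """Degrees F the hottest measured inlet could rise and still stay margin_f
    below the ASHRAE upper limit (assumes inlets rise one-for-one with setpoint)."""
    hottest = max(inlet_readings_f)
    return max(ASHRAE_UPPER_F - margin_f - hottest, 0.0)


def max_savings_fraction(degrees_raised: float, per_degree: float = 0.04) -> float:
    """Upper-bound savings, compounding 'as much as 4%' per degree."""
    return 1 - (1 - per_degree) ** degrees_raised


readings = [70.5, 72.0, 74.1, 71.3]  # hypothetical rack-inlet sensor readings, F
raise_f = setpoint_headroom_f(readings)
print(f"headroom: {raise_f:.1f} F, savings up to {max_savings_fraction(raise_f):.1%}")
```

Keying the headroom to the hottest sensor, rather than an average, reflects the point made in the text: visibility into every location is what lets operators raise temperatures without pushing any equipment out of range.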

Leveraging Technology to Reduce Impact

The technology solutions a particular facility will employ to reduce environmental impact will depend on defined goals and priorities, the age of existing systems, and budgets. In most cases, newer technologies will be phased in as existing systems age, and plans will need to evolve as new technology-based solutions emerge.

Resources