What does it take to support gigawatt-scale AI? Vertiv and NVIDIA offer a blueprint with the GB300 NVL72 at its core.

Delivering on the promise of AI depends on continued innovation in large language models, including agentic AI, as well as advances in GPU design. But it also requires closer alignment among AI models, IT hardware, and the facility infrastructure that powers and cools them.

Martin Olsen, VP, segment strategy and deployment for data centers at Vertiv, focuses on the touch points between IT and OT related to AI and works closely with key partners such as NVIDIA. Martin recently discussed Vertiv’s ongoing collaboration with AI leader NVIDIA on a number of fronts, including the recently released GB300 NVL72 reference design, aimed at gigawatt-scale AI deployments. The design supports rack densities of up to 142 kW per rack and uses NVIDIA Omniverse for AI-driven simulations.

How would you describe Vertiv’s partnership with NVIDIA, particularly in the context of AI infrastructure development?

Martin Olsen (MO): Our partnership with NVIDIA is foundational, not just strategic. It allows us to stay one GPU platform ahead, giving us visibility into the next two to three generations of compute. This foresight prepares our infrastructure solutions for future compute deployments. We’re a key player in NVIDIA’s ecosystem, covering critical pillars like power, cooling, thermal controls, and AI optimization. By integrating our expertise, we enable NVIDIA to deliver their DGX platform, including the GB300 NVL72, faster and at scale, meeting customer expectations while planning for future needs.

What makes Vertiv a valuable partner for NVIDIA in this collaboration?

MO: NVIDIA values our long history of credibility and servicing major global customers, which lends trust to our partnership. We offer the industry’s most complete power and cooling portfolio, reducing complexity for NVIDIA by minimizing infrastructure interfaces. Our heavy investment in engineering, research, and development drives innovation, addressing complex data center challenges. This end-to-end capability, combined with our innovative approach to power, thermals, and controls, creates a symbiotic relationship that enhances NVIDIA’s ability to deploy advanced AI systems like the GB300 NVL72.

One GPU platform ahead: As Martin Olsen notes, staying ahead means looking beyond the current generation of compute. Data center leaders who gain early insight into next-gen platforms are better positioned to design infrastructure that’s ready for what’s next.

Why are reference designs important in your work with NVIDIA, and how do they benefit customers?

MO: Reference designs are a cornerstone of our collaboration with NVIDIA, acting as a gold standard for deploying accelerated compute like the GB300 NVL72. They provide a complete outline of infrastructure specifications, bill of materials, and performance requirements to support NVIDIA’s DGX platforms and SuperPOD clusters. These designs enable speed, scale, energy efficiency, and serviceability. They’re not static; we iterate on them in a closed-loop feedback system with customers and partners, continuously improving performance, cost, and deployment flexibility. This evolving blueprint simplifies infrastructure deployment for customers, offering agility and efficiency.

Can you explain what the NVIDIA GB300 NVL72 is and its significance from Vertiv’s perspective?

MO: The NVIDIA GB300 NVL72 is a comprehensive platform encompassing compute, network, and storage optimized for accelerated compute in enterprises. It’s a unit of compute, much like a laptop was historically, but designed for AI at rack and row scale. Vertiv provides the power, cooling, and control framework around this platform, allowing it to operate efficiently. The GB300 NVL72 is a gold standard for AI clusters, optimized at the server, rack, and system levels. Our role is critical in enabling this platform to deliver maximum performance for AI workloads.

Beyond liquid cooling, what other value does Vertiv bring to NVIDIA’s AI infrastructure, particularly for the GB300 NVL72?

MO: While liquid cooling is pivotal—transitioning to 80% liquid and 20% air cooling for energy savings—our value extends further. We lead in the entire powertrain, from grid to chip, with solutions like battery energy storage systems and microgrids to manage grid interactions. We also manage the thermal chain, from chip to heat reuse.

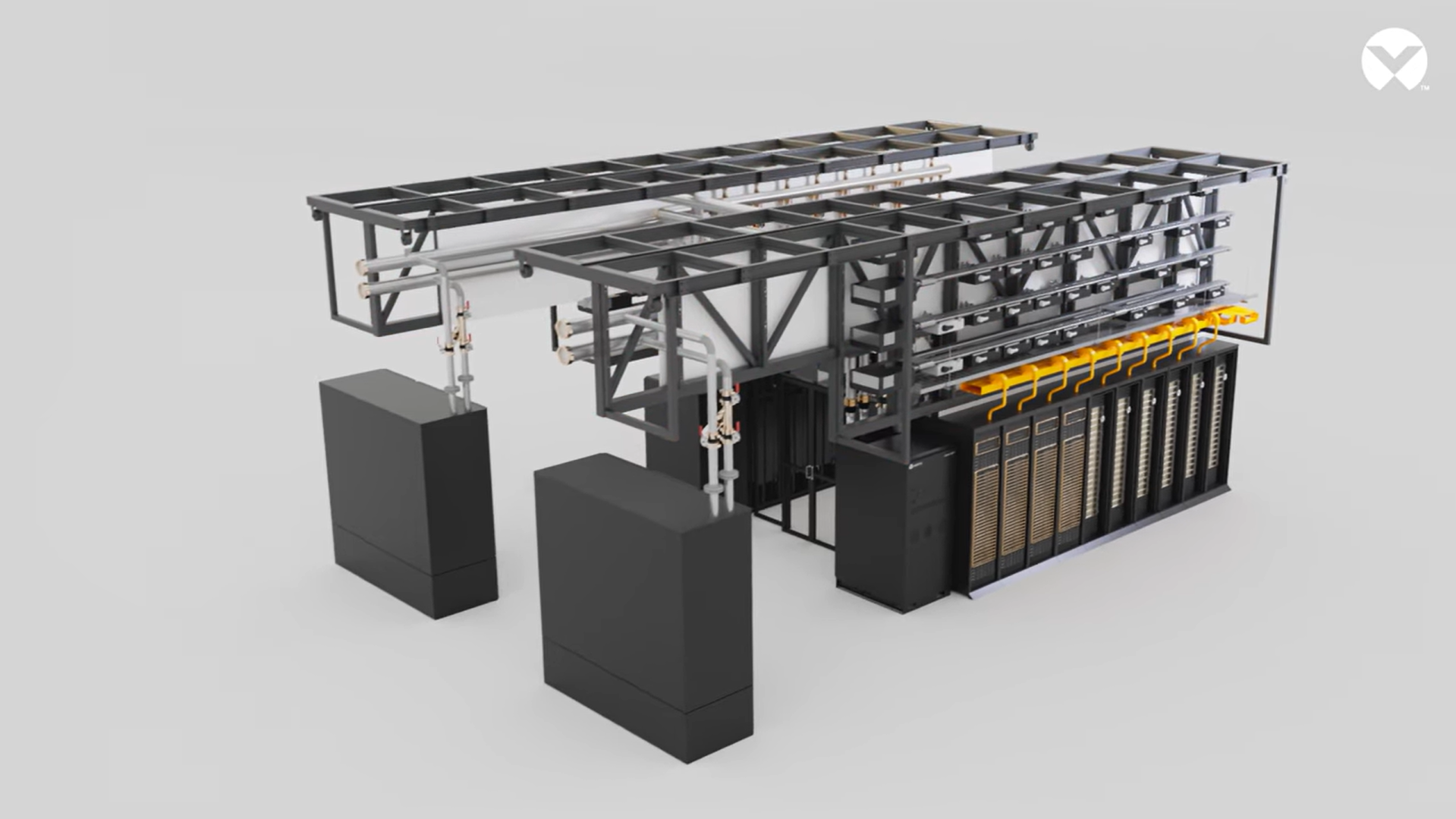

Our orchestration software integrates power and thermal systems for optimal performance. Additionally, we offer flexible deployment models, from fully prefabricated modular data centers to pre-integrated skids, reducing risk and accelerating deployment for large-scale AI systems like the GB300 NVL72.

3D rendering of a high-density AI infrastructure engineered for the NVIDIA GB200 platform and scalable for GB300. It supports 1.4 MW load across 20 DGX-optimized racks, with headroom for next-gen workloads and features a modular design with Vertiv™ SmartRun—a prefabricated overhead system for integrated cooling, end-to-end power, and cable management.
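For readers who want to sanity-check the power figures quoted in this article, the short Python sketch below works through the arithmetic. It is only an illustration: the function names are hypothetical, it assumes all electrical load in a rack ends up as heat to be removed, and it applies the 80/20 liquid-to-air split to a single rack at the 142 kW peak density, which the article does not state explicitly.

```python
# Back-of-the-envelope check of the power figures quoted in the article.
# Assumptions (not from the source): all rack power becomes heat, and the
# 80/20 liquid/air split applies uniformly to a single rack.

RACK_PEAK_KW = 142.0     # "rack densities of up to 142 kW"
ROW_LOAD_MW = 1.4        # "1.4 MW load across 20 DGX-optimized racks"
ROW_RACKS = 20
LIQUID_FRACTION = 0.80   # "80% liquid and 20% air cooling"


def average_rack_kw(load_mw: float, racks: int) -> float:
    """Average load per rack in kW for a row carrying load_mw megawatts."""
    return load_mw * 1000.0 / racks


def cooling_split_kw(rack_kw: float, liquid_fraction: float) -> tuple[float, float]:
    """Split a rack's heat load into (liquid, air) portions in kW."""
    liquid = rack_kw * liquid_fraction
    return liquid, rack_kw - liquid


if __name__ == "__main__":
    avg = average_rack_kw(ROW_LOAD_MW, ROW_RACKS)
    liquid, air = cooling_split_kw(RACK_PEAK_KW, LIQUID_FRACTION)
    print(f"Average rack load in the rendered row: {avg:.1f} kW")
    print(f"At {RACK_PEAK_KW:.0f} kW: {liquid:.1f} kW liquid, {air:.1f} kW air")
```

Under these assumptions, the rendered row averages about 70 kW per rack, roughly half the 142 kW peak density, which is consistent with the caption's mention of headroom. A rack at the full 142 kW would reject about 113.6 kW to liquid and 28.4 kW to air.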

How does Vertiv leverage NVIDIA’s Omniverse platform in this partnership?

MO: We use NVIDIA’s Omniverse to create physics-based, photorealistic digital twins of our reference architectures, including those for the GB300 NVL72. This enables real-time collaboration with architects, engineers, and customers, allowing rapid design iteration and validation before deployment. The digital twin simulates infrastructure performance, testing variables and failure scenarios virtually, which saves time and de-risks projects. This capability extends into operations, providing closed-loop optimization and feed-forward controls to continuously enhance system performance, making it a dynamic tool for smarter infrastructure decisions across the lifecycle.

What future areas of collaboration do you foresee with NVIDIA, especially regarding enterprise adoption and emerging AI technologies?

MO: As AI evolves, we’re moving from training to inference, where monetization happens through enterprise applications like radiology or banking. This shift will create a distributed AI ecosystem, with smaller models deployed on-premises, in colocation, or at the edge, driven by data security, sovereignty, latency, and integration needs. Our collaboration with NVIDIA will focus on supporting this distributed landscape, leveraging Omniverse for design and optimization. We’ll also address emerging areas like agentic AI and physical AI, allowing our infrastructure to support enterprise adoption across diverse sectors and geographies, scaling from centralized AI factories to edge deployments.

Blueprint for the AI era

Vertiv’s work with NVIDIA marks a shift from custom builds to scalable, repeatable infrastructure for AI. Reference designs like the one for the GB300 NVL72 set a new standard by integrating power, cooling, and orchestration into a unified system. As AI moves from core to edge, this partnership is shaping how the next generation of compute gets deployed.
