"We’ve gotten to the point where we’ve exhausted the ability to reject the heat effectively using air, and we’ve had to go to liquid-cooled servers. So we’ve got cold plates inside the servers; you can see the server footprint has come down significantly, so I can now pack more into a rack."

Steve Madara, Vertiv VP Global, Thermal, Data Centers

For the third week of DCD’s AI Week, Steve Madara, Vertiv’s VP Global, Thermal, Data Centers, shared the ongoing trends, challenges, and solutions for thermal resilience in the modern data center industry. He emphasized the need for flexible, scalable solutions that can keep pace with rapidly changing AI requirements, increase resource-use efficiency, and prevent performance throttling.

Steve’s presentation contrasted the current demands of large colocation and cloud providers with the priorities of enterprise operators. Key topics in the discussion below include mission-critical secondary fluid networks, the potential of two-phase cooling, the benefits of temperature optimization, and upcoming disruptions in heat reuse and energy sourcing.

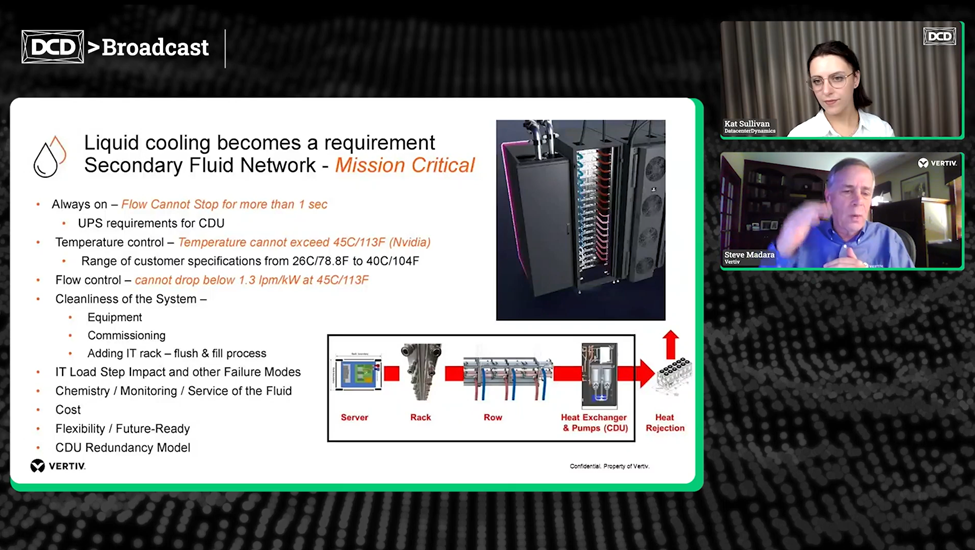

Let’s start with the term “mission critical.” It’s already used in a variety of ways in the industry, but you apply it to secondary fluid loops. What is top of mind when it comes to risk or design that customers should be planning for? And why is “mission critical” the right lens for those risks and design considerations?

Steve Madara (SM): Data centers have always been considered mission-critical. But in thermal systems, we previously had some “cushion” during failures. If you lost utility power and switched to generators, most data centers could ride through a short disruption.

With secondary fluid loops, it’s different. The fluid cannot stop flowing for more than a second. That makes these systems “ultra mission-critical,” no different from our IT power supply. Key considerations include:

- Maintaining continuous flow and minimum flow rates.

- Keeping temperatures stable (typically below 45°C).

- Monitoring fluid chemistry and maintaining cleanliness.

- Facilitating redundancy and power backup for pumps and CDUs.
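To make these requirements concrete, here is a minimal sketch of a monitoring check for a secondary loop. The 45 °C limit and the roughly one-second no-flow window come from the interview; the 100 L/min minimum flow, the `LoopSample` record, and the `check_loop` function are hypothetical. A real CDU controller would use vendor-specified limits and would act on pumps and failover rather than just returning alarm strings.

```python
from dataclasses import dataclass

# Illustrative thresholds. Only the 45 °C and ~1 s figures come from the interview;
# the minimum flow is a hypothetical value for this example loop.
MAX_SUPPLY_TEMP_C = 45.0
MIN_FLOW_LPM = 100.0
MAX_NO_FLOW_S = 1.0


@dataclass
class LoopSample:
    t_s: float            # timestamp in seconds
    flow_lpm: float       # secondary-loop flow in litres per minute
    supply_temp_c: float  # coolant supply temperature in °C


def check_loop(samples: list[LoopSample]) -> list[str]:
    """Return alarm messages for a time-ordered list of loop samples."""
    alarms = []
    no_flow_since = None  # start time of the current zero-flow interval, if any
    for s in samples:
        if s.supply_temp_c >= MAX_SUPPLY_TEMP_C:
            alarms.append(f"t={s.t_s}: supply temp {s.supply_temp_c} °C at/above limit")
        if s.flow_lpm <= 0.0:
            # Track how long flow has been stopped; alarm once it exceeds the window.
            if no_flow_since is None:
                no_flow_since = s.t_s
            elif s.t_s - no_flow_since > MAX_NO_FLOW_S:
                alarms.append(f"t={s.t_s}: no flow for {s.t_s - no_flow_since:.1f} s")
        else:
            no_flow_since = None  # flow restored, reset the interruption timer
            if s.flow_lpm < MIN_FLOW_LPM:
                alarms.append(f"t={s.t_s}: flow {s.flow_lpm} L/min below minimum")
    return alarms


# Hypothetical trace: a pump trip at t=0.5 s that is not recovered.
trace = [
    LoopSample(0.0, 120.0, 40.0),
    LoopSample(0.5, 0.0, 41.0),
    LoopSample(1.0, 0.0, 42.0),
    LoopSample(2.0, 0.0, 46.0),
]
for alarm in check_loop(trace):
    print(alarm)
```

The short no-flow window is the point: unlike an air-cooled room, there is no thermal mass to buy time, so pump and CDU backup has to be treated like IT power backup.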

Regarding flow rate, what does a fivefold increase in flow rate mean operationally for data centers?

SM: It depends on how fast you adopt new technologies as they roll out and how often you do refresh cycles; that will determine where you end up at any point in the future.

The market can go two different ways. The hyperscale players will push the envelope in many of their AI factories with the newest technology at the largest scale, and that will set the design. Then you have the smaller AI deployments, more in the enterprise space, at 500 kW, 1 MW, or maybe 2 MW, as opposed to the 20–50 MW scale we may see in these AI factories.

It’s significant. Current deployments already show higher liquid ratios, and future systems may be 95% liquid-cooled. With increasing GPU density and liquid ratios, we could see tenfold increases in required fluid flow over time.
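Why flow scales with both GPU density and liquid ratio follows from a sensible-heat balance. As a rough illustration, assuming the coolant absorbs heat only through a temperature rise $\Delta T$, the required volumetric flow $\dot V$ depends on rack power $P_{\mathrm{rack}}$, the fraction $f_{\mathrm{liq}}$ of that power captured by liquid, and the coolant's density $\rho$ and specific heat $c_p$:

$$
\dot V = \frac{f_{\mathrm{liq}}\,P_{\mathrm{rack}}}{\rho\,c_p\,\Delta T},
\qquad
\frac{\dot V_2}{\dot V_1} = \frac{f_2\,P_2}{f_1\,P_1}\cdot\frac{\Delta T_1}{\Delta T_2}
$$

For example, at the same $\Delta T$, moving from a hypothetical 40 kW rack with 70% liquid capture to a hypothetical 300 kW rack with 95% gives $(0.95 \times 300)/(0.70 \times 40) = 285/28 \approx 10.2$, roughly a tenfold increase in flow per rack. The rack figures are illustrative, not taken from the presentation.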

Operational impacts include:

- Larger pipe sizing.

- Higher pump capacity requirements.

- Potential trade-offs between flow rate and temperature.
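The flow-versus-temperature trade-off and its effect on pipe sizing can be sketched with the same heat balance. This example assumes water-like coolant properties near 25 °C, a hypothetical 1 MW liquid load, and an assumed 2 m/s mean-velocity limit; the function names are illustrative. For a fixed heat load, doubling the temperature rise halves the flow, and the minimum pipe diameter scales with the square root of the flow.

```python
import math

# Approximate properties of water near 25 °C (PG-25 has a lower specific heat).
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_KGK = 4186.0


def flow_m3_s(heat_kw: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry heat_kw at a coolant temperature rise of delta_t_k."""
    return heat_kw * 1000.0 / (WATER_DENSITY_KG_M3 * WATER_CP_J_KGK * delta_t_k)


def min_pipe_id_mm(flow: float, max_velocity_m_s: float) -> float:
    """Smallest inner diameter keeping mean velocity at or below the limit (A = Q / v)."""
    area_m2 = flow / max_velocity_m_s
    return math.sqrt(4.0 * area_m2 / math.pi) * 1000.0


# Hypothetical 1 MW liquid load with an assumed 2 m/s velocity limit.
for dt in (5.0, 10.0, 15.0):
    q = flow_m3_s(1000.0, dt)
    print(f"dT={dt:>4} K  flow={q * 60000:7.0f} L/min  pipe ID >= {min_pipe_id_mm(q, 2.0):5.0f} mm")
```

Under these assumptions, a 10 K rise needs roughly 1,440 L/min and an inner diameter of about 124 mm; cutting the rise to 5 K doubles the flow and increases the diameter by a factor of √2.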

Is there any concern that these increases could become unsustainable?

SM: I think we have enough creative juices and brain trust within the industry to figure out how to help customers make it through these transitions. As an industry, we figured out how to make air cooling work better. I think there’ll be a lot of innovation over the next few years as we learn how to apply these AI systems in these AI factories.

During that transition, what challenges should customers expect in three to five years?

SM: Vertiv has worked with liquid cooling for 20 years in other applications, so we’re very familiar with its capabilities. Two-phase has a lot of advantages; you get more capability with a two-phase fluid:

- Higher heat-removal capacity per unit area due to the phase change.

- Potential for lower flow rates and higher energy efficiency.
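The lower-flow advantage comes from latent heat: fluid that boils absorbs energy without a large temperature change, which can mean less mass flow per kilowatt than a single-phase loop running a modest temperature rise. The comparison below is a minimal sketch; the 150 kJ/kg latent heat and the 50% exit vapour quality are placeholder assumptions, not properties of any specific fluid, so substitute data-sheet values for a real comparison.

```python
WATER_CP_KJ_KGK = 4.186  # approximate specific heat of water near room temperature


def single_phase_kg_s_per_kw(delta_t_k: float, cp_kj_kgk: float = WATER_CP_KJ_KGK) -> float:
    """Sensible heating only: 1 kW = m_dot * cp * dT."""
    return 1.0 / (cp_kj_kgk * delta_t_k)


def two_phase_kg_s_per_kw(h_fg_kj_kg: float, exit_quality: float) -> float:
    """Latent heating only: 1 kW = m_dot * x_exit * h_fg (fluid enters as saturated liquid)."""
    return 1.0 / (h_fg_kj_kg * exit_quality)


# Hypothetical comparison: water with a 10 K rise versus a refrigerant with an
# assumed h_fg of 150 kJ/kg leaving the cold plate at 50% vapour quality.
sp = single_phase_kg_s_per_kw(10.0)
tp = two_phase_kg_s_per_kw(150.0, 0.5)
print(f"single-phase: {sp * 1000:.1f} g/s per kW, two-phase: {tp * 1000:.1f} g/s per kW")
```

This compares mass flow only. Refrigerant density and pressure drop differ from water, so volumetric flow and pumping power need their own check.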

However, the challenge with two-phase is that it’s different from single-phase, which is not to say it’s more complicated. The market is heavily invested in single-phase infrastructure today, with water or PG-25 as the standard. While there has been testing, the industry has deployed this first round of AI machines quickly using single-phase fluids. Moving to two-phase systems would require major infrastructure changes.

Again, there will be a lot of innovation to extend single-phase capacity, possibly with some trade-offs in temperatures and flow rates. For GPUs dense enough to need two-phase cooling, we might get creative: run single-phase to the rack with an in-rack CDU, then convert to two-phase at the rack or cold-plate level. Full two-phase adoption is unlikely in the next five years, but some early hybrid deployments may appear.

What are the biggest challenges going to be for the customers? What are the aspects they don’t need to address now, but that they will have to think about three to five years down the line?

SM: Giving credit to NVIDIA here: the pace of change has been rapid, but they’ve done a good job collaborating with partners within the industry. They’ve shared roadmaps looking out five years, so we can plan support on the infrastructure side: thermal, power, and other pieces of the infrastructure. They’ve communicated well with end users and colo providers about what the future will look like years out, which has helped us formulate, innovate, and build solutions in time for those AI and technology deployments.

Reflecting on the last 18 months, what have been the biggest disruptors accelerating innovation? What are the benefits of that, and is it hindering the market in any way?

SM: To backtrack a bit, a lot of technology was developed over the last couple of years to support the installations that have taken place over the last 18 months. At the same time, there’s been a huge learning curve on how to do these deployments. We learned piping and commissioning practices for liquid-cooled systems, how to service them, and how to flush and fill them.

As an industry, we didn’t have many best practices fully in place for these deployments. Now, the next phase is optimization: raising fluid temperatures, reducing peak PUE, and recovering stranded capacity so it can be reused for IT.

You mentioned raising fluid temperatures reduces mechanical load. Can you quantify the benefits in terms of PUE and recoverable power for IT?

SM: It may not sound like big numbers, but even in the early steps, as we get comfortable with it, we can recover 15–20% of mechanical power and reallocate it to IT. That’s about 10% of the total IT piece, which is still pretty significant if we know how to do it.
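The reallocation arithmetic is straightforward if the facility’s power envelope is fixed: whatever the cooling plant stops drawing can be handed to IT. The sketch below assumes that envelope model and ignores other overheads such as electrical losses; the 10 MW IT load, 3 MW mechanical load, and the function name are hypothetical.

```python
def reallocate_mechanical_power(it_kw: float, mech_kw: float,
                                recovered_fraction: float) -> tuple[float, float]:
    """Shift a fraction of mechanical (cooling) power to IT within a fixed power envelope.

    Returns (new_it_kw, it_gain_as_fraction_of_original_it).
    Other facility overheads are ignored in this simplified model.
    """
    freed_kw = mech_kw * recovered_fraction
    return it_kw + freed_kw, freed_kw / it_kw


# Hypothetical site: 10 MW of IT, 3 MW of mechanical load, 20% of it recovered.
new_it, gain = reallocate_mechanical_power(10_000.0, 3_000.0, 0.20)
print(f"IT capacity: {new_it:,.0f} kW (+{gain:.1%})")
```

Because the IT gain equals the recovered fraction multiplied by the mechanical-to-IT power ratio, the same 15–20% recovery translates into a different IT percentage at each site.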

For annualized PUE (annualized energy consumption), moving to liquid and raising temperatures gives us better economization opportunities. We’re going to see huge gains, in the 20–25% range, in annual energy reduction. Even small percentage gains translate into major efficiency and capacity benefits.
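The link between higher fluid temperatures and better economization can be seen by counting economizer hours. This sketch uses a synthetic temperature profile (seasonal plus daily sine waves, not real weather), an assumed 5 K dry-cooler approach, and a simple all-or-nothing rule in which an hour counts only if ambient plus approach is at or below the supply set point. Real plants also run partial-economizer modes, so treat this as an illustration of the trend only.

```python
import math


def synthetic_ambient_c(hours: int = 8760) -> list[float]:
    """Hypothetical hourly dry-bulb temperatures (°C): seasonal plus daily sine waves."""
    temps = []
    for h in range(hours):
        seasonal = 12.0 - 10.0 * math.cos(2 * math.pi * h / 8760)  # coldest at hour 0
        daily = -5.0 * math.cos(2 * math.pi * (h % 24) / 24)        # coldest at midnight
        temps.append(seasonal + daily)
    return temps


def economizer_hours(ambient_c: list[float], supply_c: float, approach_k: float) -> int:
    """Count hours when a dry cooler alone could deliver the supply temperature.

    Assumes the dry cooler can bring the fluid down to (ambient + approach); when that
    is at or below the required supply temperature, no compressor cooling is needed.
    """
    return sum(1 for t in ambient_c if t + approach_k <= supply_c)


ambient = synthetic_ambient_c()
for supply in (20.0, 30.0, 40.0):
    print(f"supply {supply:.0f} °C: {economizer_hours(ambient, supply, 5.0)} free-cooling hours")
```

Raising the supply set point shifts more of the year into free-cooling hours, which is the mechanism behind the annual energy reductions described above.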

What’s the next big AI-driven disruption in the liquid cooling space that we should prepare for over the next few years? We’ve seen power constraints impact the industry, and we’ve seen cooling react to them. What’s the next big response to the demand?

SM: The big thing on the power side has been power availability at a lot of these locations. There’s been a lot of discussion on alternative energy and what we need to do. On the thermal side, it’s been on heat reuse: How do I take all the heat generated and make it useful? In some locations, the challenge is you can only heat so many homes and swimming pools. Europe has advantages because of the proximity and district heating.

The crown jewel could be using that energy to generate electricity. We have some creative approaches, and we’ve been doing a lot of research. Reusing the energy is going to take some small-scale solutions. We have a solution that can reduce peak power by about 5% on a unit, which doesn’t sound like a big number. But if we go back to those charts, 5% translates into other numbers that allow for some recovery. So I think there will be a lot of innovation and discussion on how we apply some of that.
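Turning data center heat into electricity is difficult mainly because the heat is low-grade. The Carnot limit gives an upper bound on how much heat can become work between a source and a sink. The 45 °C and 60 °C source temperatures and the 25 °C sink below are assumed values for illustration, and real power cycles achieve only part of this bound.

```python
def carnot_efficiency(hot_c: float, cold_c: float) -> float:
    """Upper bound on the fraction of heat that can become work (temperatures in °C)."""
    if hot_c <= cold_c:
        raise ValueError("heat source must be hotter than the heat sink")
    hot_k = hot_c + 273.15
    cold_k = cold_c + 273.15
    return 1.0 - cold_k / hot_k


# Assumed temperatures: warm liquid-cooling return water versus a 25 °C ambient sink.
for source_c in (45.0, 60.0):
    eta = carnot_efficiency(source_c, 25.0)
    print(f"{source_c:.0f} °C source -> at most {eta:.1%} of the heat becomes electricity")
```

With a 45 °C source the theoretical ceiling is only about 6%, which is why even a few percent of peak power recovered is meaningful.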

There’s also a lot of discussion in the market today about using gas turbine generators and feeding their waste heat into absorption chillers. We have to fine-tune what that means for data center design, but I think there are opportunities there.
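The gas turbine idea rests on a simple energy chain: turbine waste heat drives an absorption chiller, which produces cooling in proportion to its coefficient of performance (COP). The sketch assumes a hypothetical 10 MW turbine at 35% electrical efficiency, 60% of the rejected heat being recoverable, and a COP of 0.7, a figure often cited for single-effect absorption chillers. All of these are placeholders for a real site study.

```python
def absorption_cooling_kw(turbine_electric_kw: float, electric_efficiency: float,
                          recoverable_fraction: float, chiller_cop: float) -> float:
    """Cooling produced by routing turbine waste heat to an absorption chiller.

    Simplified model: fuel input = electric output / efficiency, all non-electric
    energy counts as rejected heat, and only recoverable_fraction reaches the chiller.
    """
    fuel_kw = turbine_electric_kw / electric_efficiency
    rejected_kw = fuel_kw - turbine_electric_kw
    recovered_kw = rejected_kw * recoverable_fraction
    return recovered_kw * chiller_cop


# Hypothetical inputs: 10 MW electric, 35% efficiency, 60% heat recovery, COP 0.7.
cooling = absorption_cooling_kw(10_000.0, 0.35, 0.60, 0.70)
print(f"absorption cooling: {cooling:,.0f} kW")
```

Under those assumptions the chain yields about 7.8 MW of cooling, which shows why the pairing attracts interest and why design details matter.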

Watch the full presentation and conversation: Engineering thermal resilience for next-gen workloads