AI infrastructure imperatives: Reimagine solutions for critical AI workload challenges

Decision makers must address six essential infrastructure imperatives for enterprises, AI factories, and data centers to support HPC/AI demands and functions.


While data centers have adapted to many technological shifts over the past 40 years, AI presents unprecedented challenges that require a complete rethink of the data center. From skyrocketing power needs to the adoption of liquid cooling, every aspect of IT infrastructure is being pushed to its limits by AI.

The ebook “Infrastructure Imperatives: Solving Critical Challenges for Managing AI Workloads” delivers actionable insights to keep up with the pace of AI innovation and streamline AI factories' infrastructure needs, including:

- How to adapt to AI demands: Gain clarity on the unprecedented changes AI brings to data center infrastructure, how AI is reshaping design approaches, and why traditional strategies can’t keep up.

- Powering AI progress: Address the growing energy needs of GPU-based AI workloads, which can consume up to 10x more power than traditional IT workloads. Learn strategies for managing variable power loads, minimizing risks, and ensuring scalability.

- Cooling that goes beyond air: Traditional cooling methods fall short in AI environments. Dive into the critical role of liquid cooling, with guidance on delivery, management, and integration with air-cooling systems to protect high-density AI servers and investments.

- Building racks for tomorrow’s workloads: AI requires power-to-rack ratios far beyond today’s standards. Get insights on designing intelligent, resilient racks that meet the demands of high-density computing and integrated cooling systems.

- Holistic system management: Silos are out. See why integrated, scalable management platforms are crucial for AI environments, offering real-time visibility and advanced controls to prevent costly downtime.

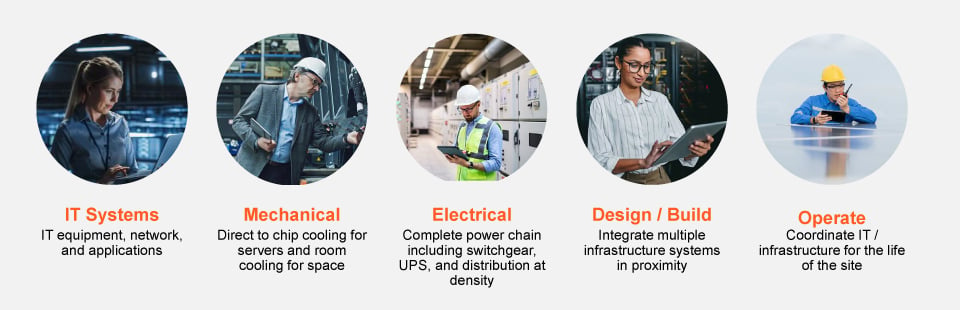

Figure 1. Implementing AI data centers will require a multidisciplinary approach, bringing together previously siloed and isolated roles to streamline efficiency and innovation from design to operations.

- Reliable support services: AI infrastructure requires specialized maintenance and optimization. Learn how to leverage experienced services and ongoing training to enable uninterrupted performance.

Table 1. A summary of the AI infrastructure imperatives to support the implementation of AI factories.

| Critical infrastructure | Imperatives |
| --- | --- |
| Cooling | Liquid cooling becomes a requirement. |
| Power | From chip to grid, the power train must account for the growing and unique GPU load profiles. |
| Services | Specialized service expertise is required to maintain an AI environment. |
| Rack | The IT rack must allow for high-density power and cooling. |
| Design | Design power and cooling as an integrated system. |
| System management | Monitoring and management systems must be holistic. |

“We have seen an immense investment in valuable next-generation computing chips and server systems, much of it related to AI. To get the most out of these assets, our customers will need them to run 24/7. Creating a resilient IT infrastructure has always been a priority, but in the future resilience will be more important, and more complex, than ever.”

Chief Technology Officer and Executive Vice President, Vertiv

The ebook is written for IT leaders, data center practitioners, and infrastructure professionals tasked with scaling their operations to meet AI demands. It includes examples of real-world challenges and best practices, insights from industry leaders, and planning frameworks and roadmaps that accompany the solution recommendations.

Understand the latest technological and system management solutions tailored for AI, and learn how to design and deploy scalable, resilient, and future-ready infrastructure.