CoreWeave and its partners have delivered the world’s first cloud deployment of NVIDIA’s GB300 NVL72 system, driving faster training, lower latency, and more efficient infrastructure for real-time AI.

An acclaimed orchestra is not merely a collection of world-class musicians; it is a singular entity where every section (brass, strings, percussion) is precisely timed and tuned to deliver a flawless performance.

When it comes to AI infrastructure, the same principles apply. Mission-critical AI performance isn’t just about adding more GPUs. It’s about coordinating compute, power, cooling, and networking so they operate as one system, one unit.

That’s exactly what Vertiv, CoreWeave and its partners have delivered with the NVIDIA GB300 NVL72 system. This isn’t a concept or a custom one-off. It’s unified infrastructure built for AI at scale.

A new standard for AI infrastructure

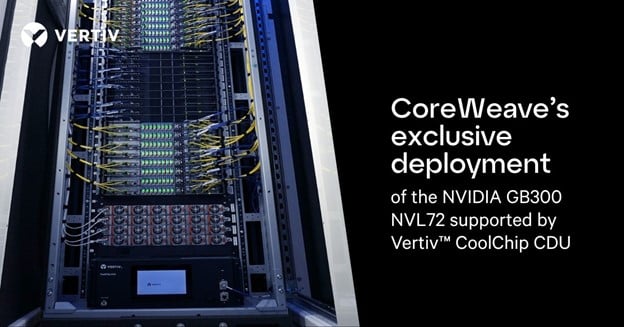

CoreWeave, the AI Hyperscaler for GPU and cloud computing, is conducting the first deployment of the NVIDIA GB300 NVL72 housed within Dell’s integrated rack system.

CoreWeave has been at the forefront of AI cloud infrastructure, with previous deployments of NVIDIA HGX H100 and H200 platforms. Its NVIDIA GB300 NVL72 deployment continues that trend.

The performance gains speak for themselves:

- Up to 50× increase in inference output for reasoning workloads vs. Hopper

- 10× improvement in user responsiveness

- 5× more throughput per watt

- 1.5× more FP4 (4-bit floating point) performance vs. Blackwell

- 1.5× more memory for up to 21TB of GPU memory per rack vs. Blackwell

- 2× NVIDIA Quantum InfiniBand bandwidth per GPU compared to previous-generation systems
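As a rough sanity check, the per-rack figures in the list above can be related to one another with simple arithmetic. The sketch below is illustrative only: it assumes 72 GPUs per rack (read from the "NVL72" product name), decimal terabytes, and that the responsiveness and throughput-per-watt gains compound multiplicatively into the headline inference figure. None of these assumptions is stated in this article.

```python
# Back-of-the-envelope checks on the per-rack figures quoted above.
# Assumptions (not stated in the article): 72 GPUs per rack, decimal TB,
# and that the 10x and 5x gains multiply into the headline figure.
GPUS_PER_RACK = 72                # assumption: from the "NVL72" product name
RACK_MEMORY_TB = 21.0             # "up to 21TB of GPU memory per rack"
MEMORY_GAIN = 1.5                 # "1.5x more memory ... vs. Blackwell"
RESPONSIVENESS_GAIN = 10.0        # "10x improvement in user responsiveness"
THROUGHPUT_PER_WATT_GAIN = 5.0    # "5x more throughput per watt"

# Approximate memory available to each GPU, in GB.
memory_per_gpu_gb = RACK_MEMORY_TB * 1000 / GPUS_PER_RACK

# Per-rack memory of the previous generation implied by the 1.5x claim.
implied_baseline_tb = RACK_MEMORY_TB / MEMORY_GAIN

# Combined gain if the two factors compound (an assumption).
combined_gain = RESPONSIVENESS_GAIN * THROUGHPUT_PER_WATT_GAIN

print(f"Memory per GPU: about {memory_per_gpu_gb:.0f} GB")
print(f"Implied previous-generation rack memory: {implied_baseline_tb:.0f} TB")
print(f"Combined gain under the multiplicative assumption: {combined_gain:.0f}x")
```

Because the quoted figures are rounded "up to" values, the results are order-of-magnitude checks rather than specifications.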

Infrastructure that scales with silicon

To run systems at this scale, cooling and power have to be designed just as deliberately as the compute. At full load, each liquid-cooled NVIDIA GB300 NVL72 rack draws ~140 kW. This is where Vertiv’s contribution is key.

Vertiv™ CoolChip coolant distribution units (CDUs) are purpose-built for these conditions. The system delivers:

- 121 kW of liquid-to-liquid heat rejection, sized and optimized for the NVIDIA GB300 NVL72 cabinet

- Compatibility with ASHRAE W5 inlet water temperatures

- Redundant pumps and dual heat exchangers for fault tolerance

- Automated loop control for steady-state operation
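To put the ~140 kW rack load and the 121 kW liquid-to-liquid rating side by side, the sketch below works through the heat balance and estimates the coolant flow needed from the standard relation Q = ṁ · c_p · ΔT. It is a minimal illustration, not a sizing method from Vertiv: the 10 K coolant temperature rise, the use of plain-water properties (c_p ≈ 4186 J/(kg·K), about 1 kg per litre), and the function name are all assumptions, and real loops often run glycol mixtures with different properties.

```python
# Illustrative heat balance for one rack, using the figures quoted above.
RACK_LOAD_KW = 140.0        # "~140 kW" per rack at full load
CDU_CAPACITY_KW = 121.0     # "121 kW of liquid-to-liquid heat rejection"

WATER_CP_J_PER_KG_K = 4186.0   # assumption: plain water, not a glycol mix
WATER_KG_PER_LITRE = 1.0       # assumption: approximate density of water
ASSUMED_DELTA_T_K = 10.0       # assumption: coolant temperature rise


def coolant_mass_flow_kg_s(heat_w, delta_t_k, cp_j_per_kg_k=WATER_CP_J_PER_KG_K):
    """Mass flow needed to carry heat_w watts at a given temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)


# Share of the rack load covered by the liquid-to-liquid rating.
liquid_fraction = CDU_CAPACITY_KW / RACK_LOAD_KW
# Heat left over, which would have to leave the rack by some other path.
residual_kw = RACK_LOAD_KW - CDU_CAPACITY_KW

flow_kg_s = coolant_mass_flow_kg_s(CDU_CAPACITY_KW * 1000, ASSUMED_DELTA_T_K)
flow_l_min = flow_kg_s / WATER_KG_PER_LITRE * 60

print(f"Liquid loop covers {liquid_fraction:.0%} of the rack load")
print(f"Residual heat: {residual_kw:.0f} kW")
print(f"Estimated flow at {ASSUMED_DELTA_T_K:.0f} K rise: {flow_l_min:.0f} L/min")
```

Halving the assumed temperature rise doubles the required flow, which is one reason inlet temperature class and loop control matter as much as the headline capacity.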

Vertiv’s capabilities also include services that streamline deployment and optimize efficiency, including:

- CFD (Computational Fluid Dynamics) modeling and thermal simulation to optimize airflow and avoid late-stage redesigns

- White space and integration planning to align cooling, power, and loop control with rack layout

- Real-time monitoring and loop control to stabilize performance under dynamic GPU loads

- Reference designs and SimReady assets for NVIDIA GB200 and GB300 systems, enabling digital validation before build

Front view of an NVIDIA GB300 NVL72 rack deployed by CoreWeave, showcasing high-performance GPU infrastructure supported by a Vertiv™ CoolChip CDU (coolant distribution unit) liquid cooling system designed to handle extreme thermal loads. (Photo courtesy of Switch)

Executing AI at speed—together

Delivering next-generation AI infrastructure isn’t just about what’s inside the rack. It’s about moving rapidly from design to deployment, and how closely your partners are aligned throughout that process.

CoreWeave’s rollout of the NVIDIA GB300 NVL72 shows that process in practice: a collaborative effort to bring a rack-scale AI platform online, built for ~140 kW loads, liquid cooling, and dense, always-on inference workloads.

As CoreWeave, using a sporting rather than musical analogy, puts it:

"Pioneering the future of AI infrastructure is a team sport. Our joint collaboration with Dell, Switch, and Vertiv on this deployment of the NVIDIA GB300 NVL72 is fundamental to our speed and agility, providing the critical support we need to turn groundbreaking technology into a reality for our clients at an unprecedented pace."

Vertiv supported the deployment with pre-validated liquid cooling systems and engineering expertise, giving CoreWeave and Dell the infrastructure alignment needed to move at lightspeed.

Where infrastructure is headed

The deployment of the NVIDIA GB300 NVL72 by CoreWeave and Dell, with support from Vertiv, marks a shift in how AI infrastructure is designed and delivered. Given the rate of change in AI, a more appropriate comparison might be electronic music rather than classical. But whatever form it takes, the analogy of a united orchestra or group, rather than an assembly of solo players, still stands. True integration, cooperation and co-development are critical for a symphonic AI performance.

Discover how to integrate power, cooling, and compute for optimal AI performance on the Vertiv AI Hub.