High-density AI workloads are reshaping thermal strategies, and hybrid cooling is emerging as the foundation for scalable, efficient data center infrastructure.

AI is no longer a future trend. It is a present force redefining how data centers are built, powered, and cooled. This evolution is creating pressure points across every layer of infrastructure.

IDC's Data Center Vision research projects that global spending on AI IT hardware will climb from $72 billion in 2024 to $258 billion by 2028. The racks supporting this surge are becoming significantly denser, with some hyperscale deployments reaching 130 kW per rack.
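To put the pace of that spending forecast in perspective, the implied compound annual growth rate can be worked out directly from the two IDC figures. This is a derived number, not one IDC states, and it assumes four years of compounding between 2024 and 2028:

$$
\text{CAGR} = \left(\frac{258}{72}\right)^{1/4} - 1 \approx 3.58^{0.25} - 1 \approx 0.376
$$

In other words, spending would have to grow by roughly 38% a year on average to reach the 2028 projection.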

This rise in density introduces a new set of cooling dynamics. With rack power profiles changing rapidly, operators need adaptive, efficient AI cooling strategies that can scale with compute demand.

Hybrid cooling provides a practical path forward, not as a replacement for existing cooling but as a strategic enhancement that helps data centers manage rising thermal loads with more precision and flexibility.

The growing case for flexible cooling

According to AFCOM’s 2024 State of the Data Center report, 38% of operators say their current cooling solutions are inadequate, and 20% are actively seeking more scalable alternatives.

More than 80% of IT leaders now view generative AI as a strategic workload that requires new investments in power and cooling, according to IDC.

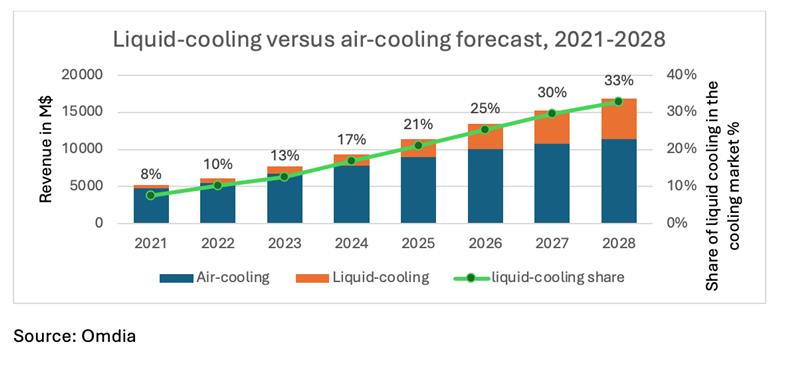

This trend is already reshaping the market. Omdia's forecast indicates that liquid cooling will grow from 8% of the cooling market in 2021 to 33% by 2028 (see Figure 1). While air cooling remains essential, these numbers signal a broader shift toward more flexible, high-capacity thermal solutions.

Figure 1. Liquid cooling grows to meet high-density demands, while air cooling remains vital for traditional IT, underscoring the need for hybrid solutions. Source: Omdia, June 2024

The results are not an endorsement of Vertiv. Any reliance on these results is at the third party’s own risk.

Higher rack power is only part of the equation. The bigger challenge is planning for an uncertain future. Many organizations are still evaluating how and when to scale AI, which makes long-term infrastructure decisions more complex. Cooling systems must be flexible enough to support current workloads while accommodating future shifts—whether that involves liquid cooling, air cooling, or a combination of both.

How hybrid cooling aligns with AI deployment

With a mix of liquid- and air-cooled IT equipment on the floor, operators are looking for ways to combine the two technologies. Hybrid cooling deployments bring liquid and air cooling together in a single optimized system, providing high cooling capacity and efficiency. Tuning the mix of liquid and air for each deployment adds flexibility, supporting the performance and efficiency demands of AI without locking infrastructure into rigid designs.

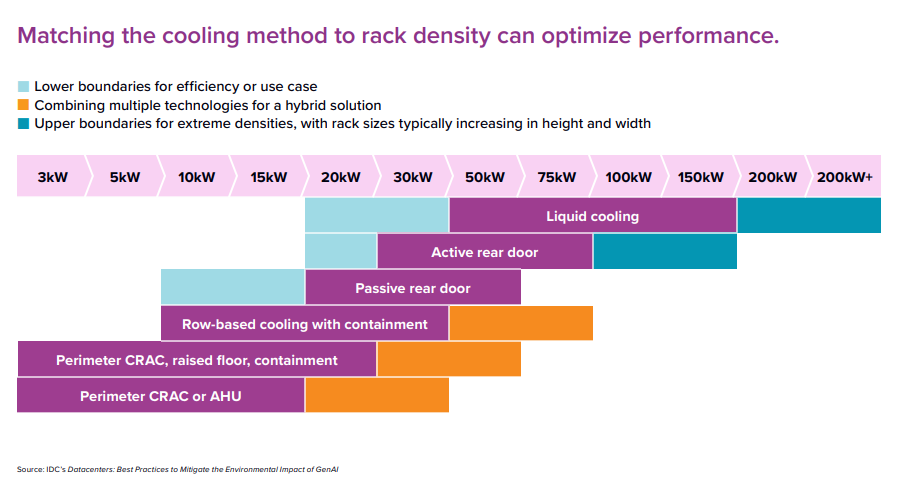

Modern data centers need cooling systems that are as dynamic as the AI workloads they support. Effective thermal management requires methods tailored to specific rack densities. In lower-density environments, air cooling remains practical and efficient. But as racks approach or exceed 50 kW, especially in GPU-intensive AI training, liquid cooling becomes essential for managing core heat loads. Even in these high-density scenarios, air cooling still plays a supporting role, typically handling around 20% of the heat load (see Figure 2).

Figure 2. Cooling method recommendations by rack density, showing air cooling for traditional IT (3-30 kW) and liquid cooling for high-density AI workloads (50 kW+), with hybrid solutions bridging the gap.

Source: IDC Data Center Vision, March 2025
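The density bands in Figure 2 and the roughly 20% air contribution can be expressed as a simple planning rule. The following is a minimal sketch under stated assumptions, not a Vertiv or IDC tool: the function `plan_rack_cooling`, the `CoolingPlan` type, and the default `air_share` of 0.20 are hypothetical. Applying that same 20% split to the 30-50 kW hybrid band is an added assumption, because the source only cites it for high-density racks.

```python
from dataclasses import dataclass

# Hypothetical planning helper. Thresholds follow the Figure 2 description:
# air for roughly 3-30 kW, liquid for 50 kW and above, hybrid in between.
AIR_MAX_KW = 30.0
LIQUID_MIN_KW = 50.0


@dataclass
class CoolingPlan:
    method: str       # "air", "hybrid", or "liquid"
    liquid_kw: float  # heat assumed to go to the liquid loop
    air_kw: float     # heat assumed to go to room or rear-door air cooling


def plan_rack_cooling(rack_kw: float, air_share: float = 0.20) -> CoolingPlan:
    """Map rack power to a cooling method and a rough liquid/air heat split.

    air_share defaults to the ~20% air contribution cited for high-density
    racks; using it for the 30-50 kW hybrid band is an assumption.
    """
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if not 0.0 <= air_share <= 1.0:
        raise ValueError("air_share must be between 0 and 1")

    if rack_kw <= AIR_MAX_KW:
        # Lower-density racks: air cooling carries the full load.
        return CoolingPlan("air", liquid_kw=0.0, air_kw=rack_kw)

    # Above 30 kW, liquid carries most of the heat and air handles the rest.
    method = "liquid" if rack_kw >= LIQUID_MIN_KW else "hybrid"
    air_kw = rack_kw * air_share
    return CoolingPlan(method, liquid_kw=rack_kw - air_kw, air_kw=air_kw)


if __name__ == "__main__":
    for kw in (8.0, 40.0, 130.0):
        print(kw, plan_rack_cooling(kw))
```

For a 130 kW hyperscale rack, this rule assigns about 104 kW to liquid and 26 kW to air. A real design would also weigh facility water temperatures, containment, and equipment vendor specifications rather than relying on fixed thresholds.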

High-density deployments often implement hybrid strategies that pair direct liquid cooling with technologies such as rear-door heat exchangers or active rear doors. These configurations enable precise thermal management and support densification without compromising performance or efficiency.

What it takes to cool tomorrow’s infrastructure

Uptime Institute’s 2024 research shows that data centers originally built to support 7-8 kW racks now face demands of 30 kW or more. In AI deployments, it’s not uncommon to see racks running consistently above 100 kW.

This rise in density does not mean traditional IT workloads are going away. Lower-density racks will continue to be deployed across enterprise and colocation environments. However, the growth rate for AI deployments and the associated thermal intensity are significantly higher.

Cooling systems must now support a broader range of rack densities, respond to mixed deployments of liquid-cooled and air-cooled IT in the same spaces, and scale quickly as demands grow. The most effective hybrid cooling strategies offer several operational advantages that align with these requirements:

- Adaptability: The ability to support both air and liquid cooling in mixed environments has become a key decision factor for operators deploying AI-ready infrastructure. This approach enables faster deployment and offers flexibility without the need for full-scale retrofits.

- Interoperability: Hybrid cooling requires seamless coordination across all thermal assets. Effective systems synchronize mixed cooling architectures, maintain balanced performance between air-cooled and liquid-cooled racks, and automatically adjust free cooling operation modes based on real-time ambient conditions to maximize energy efficiency.

- Scalability: Modular designs support fast, repeatable deployments that grow alongside AI workloads. Standardized architectures also make mixed cooling environments easier to manage.

- Serviceability: Liquid cooling brings specialized service requirements, including end-to-end fluid management expertise and certified lifecycle maintenance. Meeting them keeps operations reliable across diverse cooling environments.
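The interoperability point about adjusting free-cooling modes to real-time ambient conditions can be illustrated with a simplified mode selector. This is a minimal sketch, not Vertiv's control logic: the three-mode scheme, the function name `select_cooling_mode`, and the default 5 °C heat-exchanger approach temperature are all assumptions. A production controller would also add hysteresis and sensor filtering so it does not toggle between modes when temperatures hover near a boundary.

```python
from enum import Enum


class CoolingMode(Enum):
    FULL_FREE_COOLING = "full free cooling"        # economizer alone meets the setpoint
    PARTIAL_FREE_COOLING = "partial free cooling"  # economizer pre-cools, compressors trim
    MECHANICAL = "mechanical"                      # compressors carry the full load


def select_cooling_mode(
    ambient_c: float,
    supply_setpoint_c: float,
    return_temp_c: float,
    approach_c: float = 5.0,
) -> CoolingMode:
    """Pick an operating mode from outdoor temperature (illustrative sketch).

    approach_c is an assumed heat-exchanger approach: the economizer is taken
    to cool the loop no lower than ambient_c + approach_c.
    """
    if supply_setpoint_c >= return_temp_c:
        raise ValueError("supply setpoint must be below return temperature")

    achievable_c = ambient_c + approach_c
    if achievable_c <= supply_setpoint_c:
        # Outdoor conditions alone can reach the supply setpoint.
        return CoolingMode.FULL_FREE_COOLING
    if achievable_c < return_temp_c:
        # Outdoor air can still remove some heat before the compressors.
        return CoolingMode.PARTIAL_FREE_COOLING
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    for ambient in (5.0, 20.0, 32.0):
        mode = select_cooling_mode(ambient, supply_setpoint_c=20.0, return_temp_c=30.0)
        print(ambient, mode.value)
```

Comparing the achievable economizer outlet temperature against both the supply setpoint and the return temperature is what separates the partial mode from the other two. Cooler ambient conditions shift more of the load away from compressors, which is where the energy savings come from.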

Many operators are now focused on extending the flexibility of existing infrastructure rather than replacing it entirely. Solutions such as the Vertiv™ CoolPhase Flex Packaged System show these capabilities in action. The unit can be deployed as either an air-cooled or a liquid-cooled system and converted later as business demands change, giving operators a practical way to scale thermal performance as AI requirements increase.

Designing for flexibility at scale

AI’s rapid adoption is prompting operators to rethink data center infrastructure, with a growing emphasis on modularity, integrated cooling, and deployment speed. Supporting both traditional IT applications and high-density AI workloads in the same facility requires infrastructure that can adjust without extensive retrofits.

Compass Datacenters is putting this approach into action. The company, which designs and builds large-scale data center campuses, collaborated with Vertiv on a hybrid-ready cooling strategy built around a system that supports both air and liquid cooling in a single unit. This gave Compass a straightforward way to introduce liquid cooling and avoid costly retrofits as customer requirements changed.

Unlock AI’s full capabilities with hybrid cooling

Hybrid cooling is emerging as a key strategy for data centers adapting to AI. Racks run hotter. Workloads shift faster. Cooling systems need to respond with more precision. Hybrid systems allow operators to use air and liquid based on the specific demands of each deployment. They support higher densities, reduce upgrade costs, and help maintain efficiency as infrastructure expands. Flexibility at this level will be crucial as AI deployments continue to grow across different environments.
